Teachers have long gotten guidance that we should make our learning objectives explicit to our students.

The formula goes something like this: “By the end of the lesson, you will be able to [know and do these several things].”

I’ve long been skeptical about this guidance — in part because such formulas feel forced and unnatural to me. I’m an actor, but I just don’t think I can deliver those lines convincingly.

The last time I asked for research support behind this advice, a friend pointed me to research touting its benefits. Alas, that research relied on student reports of their learning. Sadly, in the past, such reports haven’t been a reliable guide to actual learning.

For that reason, I was delighted to find a new study on the topic.

I was especially happy to see this research come from Dr. Faria Sana, whose work on laptop multitasking has (rightly) gotten so much love. (Whenever I talk with teachers about attention, I share this study.)

Strangely, I like research that challenges my beliefs. I’m especially likely to learn something useful and new when I explore it. So: am I a convert?

Take 1; Take 2

Working with college students in a psychology course, Sana’s team started with the basics.

In her first experiment, she had students read five short passages about mirror neurons.

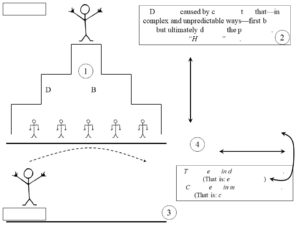

Group 1 read no learning objectives.

Group 2 read three learning objectives at the beginning of each passage.

And, Group 3 read all fifteen learning objectives at the beginning of the first passage.

The results?

Both groups that read the learning objectives scored better than the group that didn’t. (Group 2, with the learning objectives spread out, learned a bit more than Group 3, with the objectives all bunched together — but the differences weren’t large enough to reach statistical significance.)

So: compared to doing nothing, starting with learning objectives increased learning of these five passages.

But: what about compared to doing a plausible something else? Starting with learning objectives might be better than starting cold. Are they better than other options?

How about activating prior knowledge? Should we try some retrieval practice? How about a few minutes of mindful breathing?

Sana’s team investigated that question. In particular — in their second experiment — they combined learning objectives with research into pretesting.

As I’ve written before, Dr. Lindsay Richland’s splendid study shows that “pretesting,” asking students questions about an upcoming reading passage even though they don’t know the answers yet, yields great results. (Such a helpfully counter-intuitive suggestion!)

So, Team Sana wanted to know: what happens if we present learning objectives as questions rather than as statements? Instead of reading

“In the first passage, you will learn about where the mirror neurons are located.”

Students had to answer this question:

“Where are the mirror neurons located?” (Note: the students hadn’t read the passage yet, so it’s unlikely they would know. Only 38% of these questions were answered correctly.)

Are learning objectives more effective as statements or as pretests?

The Envelope Please

Pretests. By a lot.

On the final test — with application questions, not simple recall questions — students who read learning-objectives-as-statements got 53% correct.

Students who answered learning-objectives-as-pretest-questions got 67% correct. (For the stats minded, Cohen’s d was 0.84! That’s HUGE!)

So: traditional learning objectives might be better than nothing, but they’re not nearly as helpful as learning-objectives-as-pretests.
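For readers who want a feel for what a Cohen’s d of 0.84 means, here is a rough back-of-envelope sketch. It assumes (my assumption, not something I have checked against the paper) that d was computed from exactly these two group means as the difference divided by a pooled standard deviation. The function name `implied_pooled_sd` is hypothetical, made up for this illustration.

```python
# Back-of-envelope check only; the spread computed here is NOT a figure from the study.
mean_statements = 53.0  # percent correct, objectives as statements (from the post)
mean_pretests = 67.0    # percent correct, objectives as pretest questions (from the post)
cohens_d = 0.84         # effect size quoted in the post


def implied_pooled_sd(mean_a: float, mean_b: float, d: float) -> float:
    """Pooled SD implied by the textbook formula d = (mean_b - mean_a) / pooled_sd."""
    return (mean_b - mean_a) / d


pooled_sd = implied_pooled_sd(mean_statements, mean_pretests, cohens_d)
print(f"Implied pooled SD: {pooled_sd:.1f} percentage points")
```

Under that assumption, the 14-point gap between the groups amounts to most of a standard deviation in test scores, which is why the effect counts as large by the usual rules of thumb.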

This finding prompts me to speculate. (Alert: I’m shifting from research-based conclusions to research-&-experience-informed musings.)

First: Agarwal and Bain describe retrieval practice this way: “Don’t ask students to put information into their brains (by, say, rereading). Instead, ask students to pull information out of their brains (by trying to remember).”

As I see it, traditional learning objectives feel like review: “put this information into your brain.”

Learning-objectives-as-pretests feel like retrieval practice: “try to take information back out of your brain.” We suspect students won’t be successful in these retrieval attempts, because they haven’t learned the material yet. But, they’re actively trying to recall, not trying to encode.

Second: even more speculatively, I suspect many kinds of active thinking will be more effective than a cold start (as learning objectives were in Study 1 above). And, I suspect that many kinds of active thinking will be more effective than a recital of learning objectives (as pretests were in Study 2).

In other words: am I a convert to listing learning objectives (as traditionally recommended)? No.

I simply don’t think Sana’s research encourages us to follow that strategy.

Instead, I think it encourages us to begin classes with some mental questing. Pretests help in Sana’s studies. I suspect other kinds of retrieval practice would help. Maybe asking students to solve a relevant problem or puzzle would help.

Whichever approach we use, I suspect that inviting students to think will have a greater benefit than teachers’ telling them what they’ll be thinking about.

Three Final Points

I should note three ways that this research might NOT support my conclusions.

First: this research was done with college students. Will objectives-as-pretests work with 3rd graders? I don’t know.

Second: this research paradigm included a very high ratio of objectives to material. Students read, in effect, one learning objective for every 75 words in a reading passage. Translated into a regular class, that’s a HUGE number of learning objectives.

Third: does this research about reading passages translate to classroom discussions and activities? I don’t know.

Here’s what I do know. In these three studies, Sana’s students remembered more when they started reading with unanswered questions in mind. That insight offers teachers an inspiring prompt for thinking about our daily classroom work.
