Our story begins with a surprise and an exclamation point.

Back in 1968, researchers Rosenthal and Jacobson wanted to know if teachers’ expectations shaped students’ academic performance and development. To explore this question, they worked with students and teachers at “Oak School.”

- First, they gave 320 students in grades 1-6 an IQ test.

- Then, they told the teachers that 20% of those students had been identified as “late bloomers.” The teachers should expect dramatic academic and intellectual improvement over the school year. In this way, researchers raised the teachers’ academic expectations for these students.

- Here’s the surprise: those 65 “late bloomers” had been randomly selected. Realistically, there was no reason to expect any more progress from these students than from anyone else.

- And here’s the exclamation point: at year’s end, when Rosenthal and Jacobson remeasured IQ, those late bloomers saw an astonishing increase in IQ! (In some places, they reported that these students got up to a 24.8 point bump in IQ!!!)

From these data, Rosenthal and Jacobson concluded that teachers’ expectations have remarkable power to shape student outcomes. It seems that our expectations have so much influence, we can even increase our students’ IQs by believing in them and communicating our belief and support.

Under the name of the “Pygmalion Effect,” this finding has had an enormous effect in education. Many (most?) teachers have heard some version of the claim that “teacher expectations transform student capabilities.”

Too Good to Be True?

I’ve seen several enthusiastic references to the Pygmalion effect recently, so I thought it would be helpful to review this research pool.

A quick review of the 1968 study raises compelling concerns.

First: all of the extra IQ gains came for the first and second graders. Even if we accept the study’s data — more on this point in a moment — then teachers’ expectations benefit 6-8 year olds, but not older students. That limitation alone makes the Pygmalion Effect much less dazzling.

Second: one of the first-grade classes in this study tested bizarrely low on its initial IQ test. (In the ugly language of the time, they would have been classified as “mentally deficient.”) The likelier explanation is therefore NOT that teacher expectations raised IQ, but that an inaccurate initial measurement was followed by a more accurate one at year’s end.
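To see how a noisy first test can produce apparent "gains" all by itself, here is a minimal Python sketch. Everything in it is an assumption made for illustration, not data from the 1968 study: the true-score distribution (mean 100, SD 15), the size of the measurement noise, the cut-off of 70, and the function name `simulate_retest_gain` are all hypothetical. It also selects individual low scorers rather than one unusual class, but the mechanism is the same.

```python
import random
import statistics

def simulate_retest_gain(n_students=10_000, noise_sd=10.0, cutoff=70.0, seed=0):
    """Average retest 'gain' for students whose FIRST score fell below cutoff.

    Nothing happens between the two tests: each score is the student's
    unchanging true ability plus independent measurement noise.
    """
    rng = random.Random(seed)
    gains = []
    for _ in range(n_students):
        true_iq = rng.gauss(100, 15)                # hypothetical true ability
        first = true_iq + rng.gauss(0, noise_sd)    # noisy first test
        second = true_iq + rng.gauss(0, noise_sd)   # noisy retest
        if first < cutoff:                          # keep only low first scores
            gains.append(second - first)
    return statistics.mean(gains), len(gains)

mean_gain, n_low = simulate_retest_gain()
print(f"{n_low} low scorers gained {mean_gain:.1f} points on average")
```

Under these made-up settings, the students who scored very low the first time "improve" on the retest even though no one treated them differently. That is why an implausibly low starting score should make us cautious about crediting the later gain to teacher expectations.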

Third: the numbers get squishy. While one report describes the “24.8 point increase” quoted above, others focus on a FOUR point increase. If reported results differ by a factor of six, we should hesitate to put our confidence in the study. (Honestly, the claim that IQ went up 24.8 points in a single year itself makes confidence hard to give.)
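The size of that gap is easy to check with a few lines of Python. The variable names are mine; the two figures are the ones quoted above.

```python
reported_large = 24.8  # point increase quoted in one report
reported_small = 4.0   # point increase quoted in others

# How many times bigger is the large figure than the small one?
ratio = reported_large / reported_small

# How much larger is it, expressed as a percentage of the small figure?
percent_difference = (reported_large - reported_small) / reported_small * 100

print(f"ratio: {ratio:.1f}x")
print(f"percent difference: {percent_difference:.0f}%")
```

Either way you phrase it, the larger figure is roughly six times the smaller one, which is a very wide spread for a single study's headline result.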

Fourth: as far as I can tell, researchers measured only one variable: IQ. I’m surprised they didn’t measure — say — academic progress, or grades, or standardized test scores, or other academic measures.

In brief, even the most basic questions throw the Pygmalion Effect claims into doubt.

What’s Happened in the Last 50 Years?

If a study from 1968 doesn’t offer persuasive guidance, what about subsequent research? Honestly, we’ve got an ENORMOUS amount. Rather than summarize all of it — an impossible task for a blog post — I’ll focus on a few key themes.

I’m relying on two scholarly articles to find these themes:

- a 2005 meta-analysis by Lee Jussim and Kent Harber, and

- a 2018 systematic review by Wang, Rubie-Davies, and Meissel.

First, to quote the Jussim meta-analysis:

“Self-fulfilling prophecies in the classroom do occur, but these effects are typically small, [and] they do not accumulate greatly across perceivers or over time.”

That is: the Pygmalion Effect isn’t nothing, but it’s not remotely as simple or robust as commonly asserted or implied.

Second, as noted in the Wang review, the field suffers from real problems with methodology. For instance, when looking at the effect that teachers’ expectations have on academic outcomes, Wang’s team found that 40% of the studies didn’t consider the students’ baseline academic achievement. Without knowing where the students began, it hardly seems plausible to make strong claims about how much progress they made.

Third — back to Jussim:

“teacher expectations may predict student outcomes more because these expectations are accurate than because they are self-fulfilling.”

If I struggled to learn German in high school, my college instructor might reasonably predict that learning Finnish will be a challenge for me. In this case, it’s likely that my teacher’s expectations didn’t limit my progress; instead, my difficulty with learning another foreign language influenced my teacher’s expectations.

Fourth: in some cases, negative expectations can demonstrably slow student progress. This research field has its own literature and terminology, so I won’t explore it here.

Getting Beyond the Myths

To be clear, I think teachers SHOULD have high expectations of our students — and we should let them know that we do.

However, I don’t think that high expectations are enough. For that matter, I don’t think that any one-step, uplifting strategy is enough. Whether we’re talking growth mindset posters or SEL seminars or an hour of coding, no one easy thing will offer dramatic benefits to most students.

After all, if such an easy, one-step solution existed, teachers would have figured it out on our own.

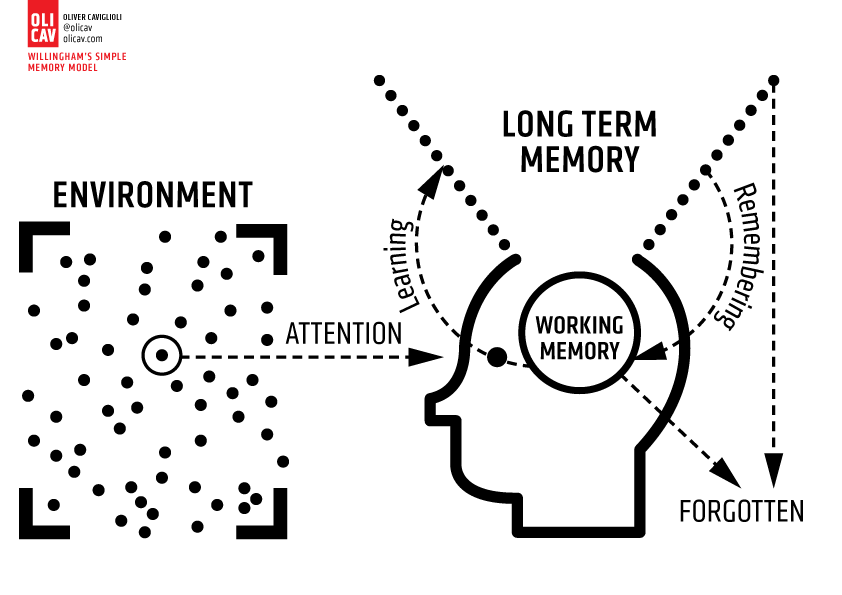

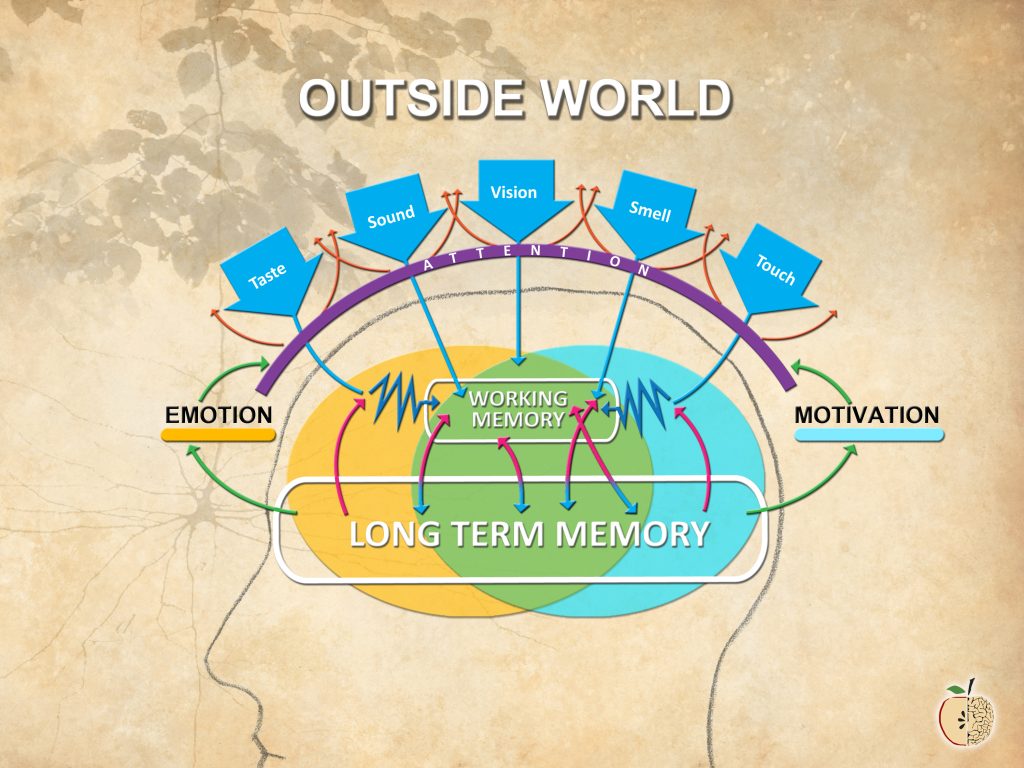

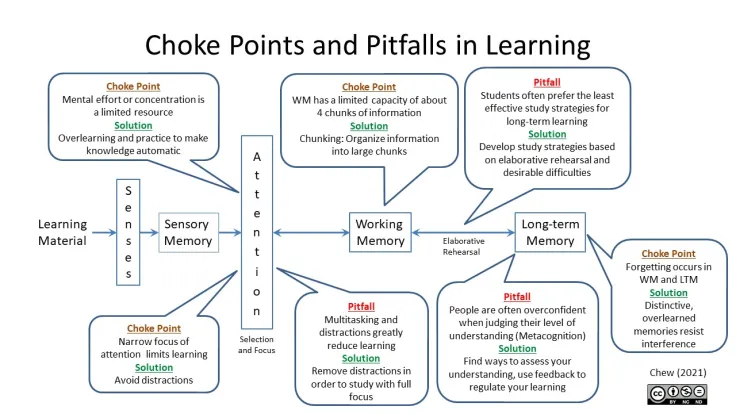

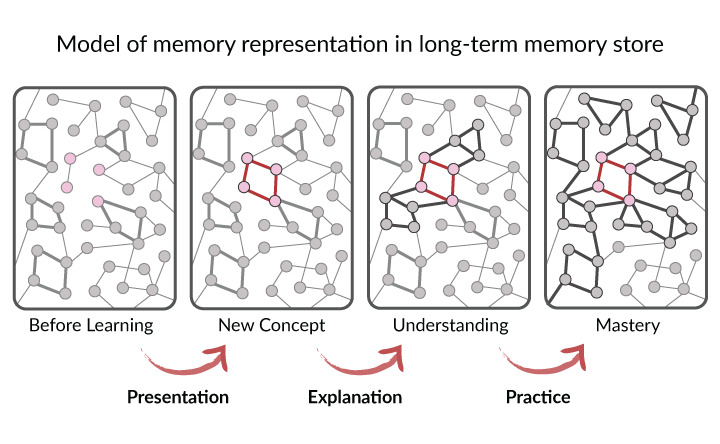

Instead, we need to think about our instruction as a complex web of small but meaningful improvements: working-memory management here, fostering attention there; enhancing belonging with this ongoing strategy, establishing structure and routine with that ongoing strategy.

Classrooms won’t be transformed by one simple act. They will come to life when step-by-step, day after day, we use cognitive science to guide our planning.

Jussim, L., & Harber, K. D. (2005). Teacher expectations and self-fulfilling prophecies: Knowns and unknowns, resolved and unresolved controversies. Personality and Social Psychology Review, 9(2), 131-155.

Wang, S., Rubie-Davies, C. M., & Meissel, K. (2018). A systematic review of the teacher expectation literature over the past 30 years. Educational Research and Evaluation, 24(3-5), 124-179.