Every teacher knows: students won’t learn much if they don’t pay attention. How can we help them do so? (In my experience, shouting “pay attention!” over and over doesn’t work very well…)

So, what else can we do?

As is so often the case, I think “what should we do?” isn’t exactly the right question.

Instead, we teachers should ask: “how should we THINK ABOUT what we do?”

When we have good answers to the “how-do-we-think?” question, we can apply those thought processes to our own classrooms and schools.

So, how should we think about attention?

Let me introduce “attention contagion”…

Invisible Peer Pressure

A research team in Canada wanted to know: can students “catch” attention from one another? How about inattention?

That is: if Student A pays attention, will that attentiveness cause Student B to pay more attention as well?

Or, if Student A seems inattentive, what happens with Student B?

To study this question, a research team led by Dr. Noah Forrin had two students (A and B) watch a 50-minute video in the same small classroom.

In this case, “Student A” was a “confederate”: that is, s/he had been trained…

to “pay attention”: that is, focus on the video and take frequent notes, or

NOT to “pay attention”: that is, slouch, take infrequent notes, glance at the clock.

Student A sat diagonally in front of Student B, visible but off to the side.

What effect did A’s behavior have on B?

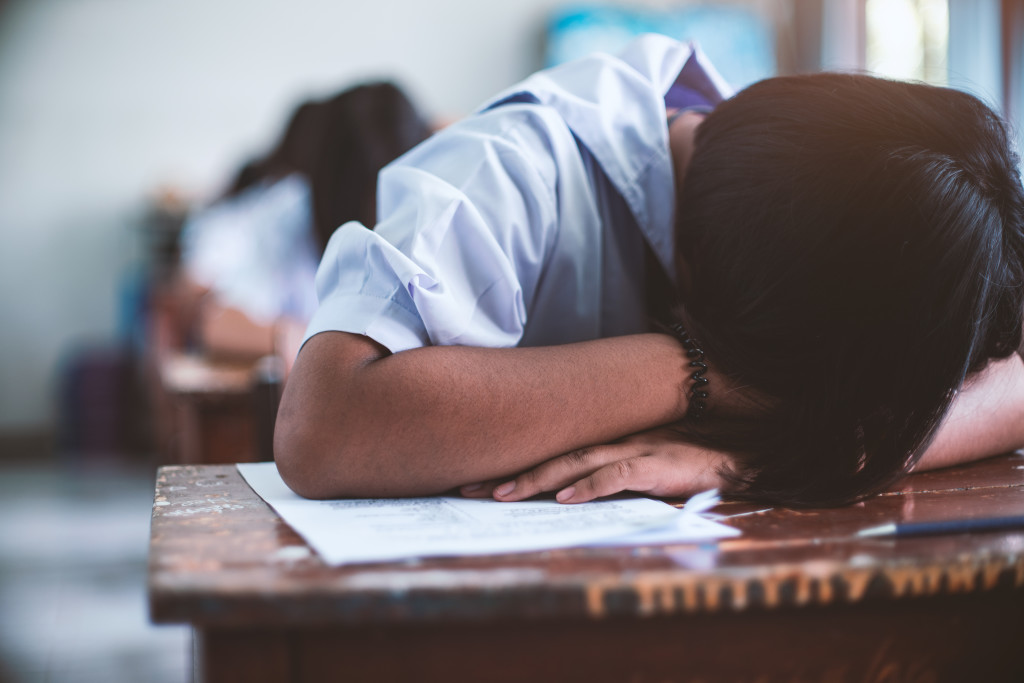

Well, when A paid attention, B

… reported focusing more,

… focused more, got less drowsy, and fidgeted less,

… took more notes, and

… remembered slightly more on a subsequent multiple-choice quiz.

These results seem all the more striking because the inattentive confederate had been trained NOT to be conspicuously distracting. NO yawning. NO fidgeting. NO pen tapping.

The confederates, in other words, didn’t focus on the video, but didn’t try to draw focus themselves. That simple lack of focus — even without conspicuous, noisy distraction — sapped Student B’s attention.

Things Get Weird

So far, this study (probably) confirms teacherly intuition. I’m not terribly surprised that one student’s lack of focus has an effect on other students. (Forrin’s research team wasn’t surprised either. They had predicted all these results, and have three different theories to explain them.)

But: what happens if Student A sits diagonally BEHIND Student B, instead of diagonally in front?

Sure enough, Forrin’s team found the same results.

Student B caught Student A’s inattention, even if s/he couldn’t see it.

I have to say: that result seems quite arresting.

Forrin and Co. suggest that Student B could hear Student A taking notes — or not taking notes. And this auditory cue served as a proxy for attentiveness more broadly.

But whatever the reason, “attention contagion” happens whether or not students can see each other. (Remember: the confederates had been trained not to be audibly distracting — no sighs, no taps, no restless jostling about.)

Classroom Implications

I wrote at the top that teachers can use research to guide our thinking. So, what should we DO when we THINK about attention contagion?

To me, this idea shifts the focus somewhat from individual students to classroom norms.

That is: in the old days, I wanted that-student-right-there to pay attention. To do so, I talked to that-there-student. (“Eyes on the board, please, Bjorn.”)

If attention contagion is a thing, I can help that-student-right-there pay attention by ensuring ALL students are paying attention.

If almost ALL of my students focus, that-student-right-there might “catch” their attentiveness and focus as well.

Doug Lemov — who initially drew my attention to this study — rightly points to Peps Mccrea’s work.

Mccrea has written substantively about the importance of classroom norms. When teachers establish focus as a classroom norm right from the beginning, this extra effort will pay off down the road.

The best strategy to do so will vary from grade to grade, culture to culture, teacher to teacher. But this way of thinking can guide us in doing so in our specific classroom context.

Yes, Yes: Caveats

I should point out that the concept of “attention contagion” is quite new, and its newness means we don’t have much research at all on the topic.

Forrin’s team has replicated the study with online classrooms, but these are the only two studies on the topic that I know of.

And: two studies is a VERY SMALL number.

Note, too, that the research was done (for very good reasons) in a highly artificial context.

So, we have good reason to be curious about pursuing this possibility. But we should not take “attention contagion” to be a settled conclusion in educational psychology research.

TL;DR

To help our students pay attention, we can work with individual students on their behavior and focus.

And, we can emphasize classroom norms of focus — norms that might help students “catch” attention from one another.

Especially if more classroom research reinforces this practice, we can rethink attention with “contagion” in mind — and thus help our students learn.

Forrin, N. D., Huynh, A. C., Smith, A. C., Cyr, E. N., McLean, D. B., Siklos-Whillans, J., … & MacLeod, C. M. (2021). Attention spreads between students in a learning environment. Journal of Experimental Psychology: Applied, 27(2), 276.
