I recently wrote a series of posts about research into asking questions. As noted in the first part of that series, we have lots of research that points to a surprising conclusion.

Let’s say I begin class by asking students questions about the material they’re about to learn. More specifically: because the students haven’t learned this material yet, they will almost certainly get the answers wrong.

Even more specifically — and more strangely — I’m actually trying to ask them questions that they won’t answer correctly.

In most circumstances, this way of starting class would sound…well…mean. Why start class by making students feel foolish?

Here’s why: we’ve got a good chunk of research showing that these questions — questions that students will almost certainly get wrong — ultimately help them learn the correct answers during class.

(To distinguish this particular category of introductory-questions-that-students-will-get-wrong, I’m going to call them “prequestions.”)

Now, from one perspective, it doesn’t really matter why prequestions help. If asking prequestions promotes learning, we should probably ask them!

From another perspective, we’d really like to know why these questions benefit students.

Here’s one possibility: maybe they help students focus. That is: if students realize that they don’t know the answer to a question, they’ll be alert to the relevant upcoming information.

Let’s check it out!

Strike That, Reverse That, Thank You

I started by exploring prequestions, but we could also think about the research I’m about to describe from the perspective of mind-wandering.

If you’ve ever taught, and ESPECIALLY if you’ve ever taught online, you know that students’ thoughts often drift away from the teacher’s topic to…well…cat memes, or a recent sports upset, or some romantic turmoil.

For obvious reasons, we teachers would LOVE to be able to reduce mind-wandering. (Check out this blog post for one approach.)

Here’s one idea: perhaps prequestions could reduce mind-wandering. That is: students might have their curiosity piqued — or their sense of duty highlighted — if they see how much stuff they don’t know.

Worth investigating, no?

Questions Answered

A research team — including some real heavy hitters! — explored these questions in a recent study.

Across two experiments, they had students watch a 26-minute video on a psychology topic (“signal detection theory”).

- Some students answered “prequestions” at the beginning of the video.

- Others answered those questions sprinkled throughout the video.

- And some (the control group) solved unrelated algebra problems.

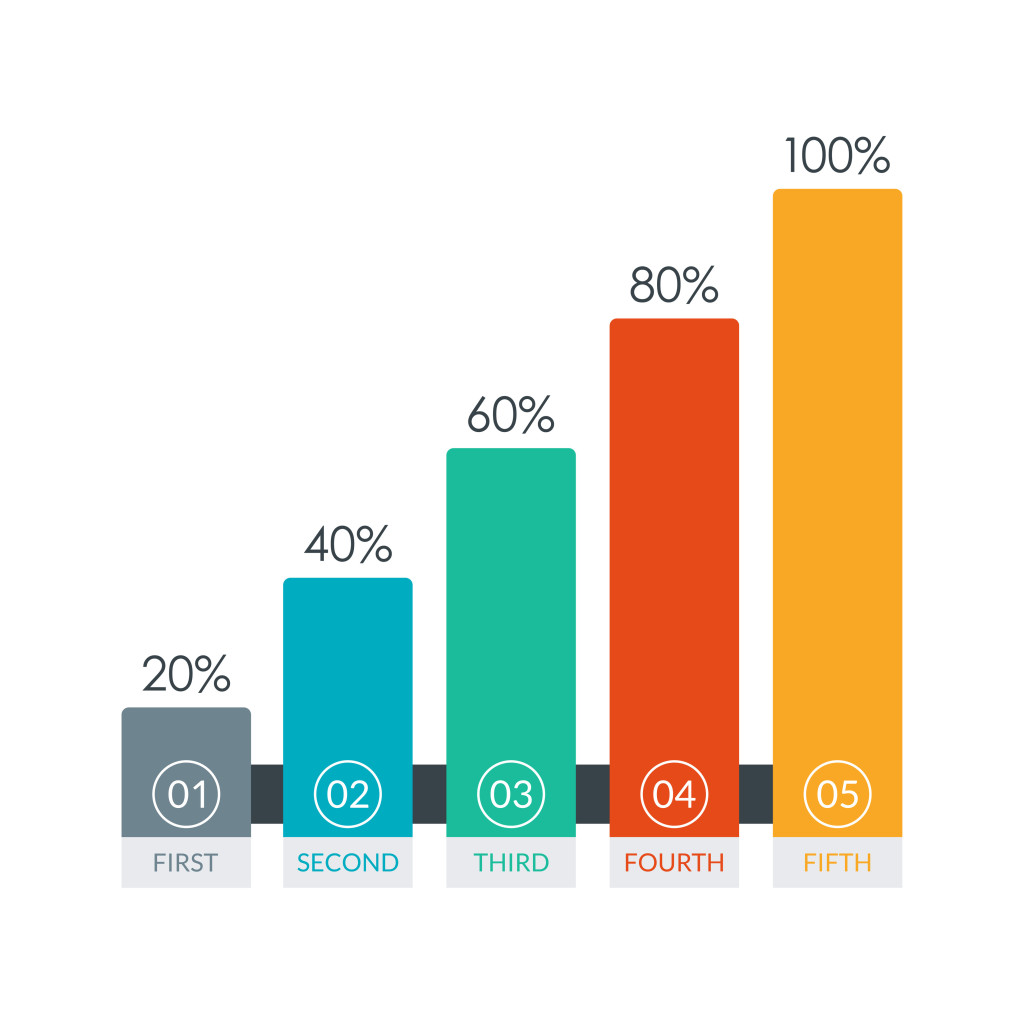

Once the researchers crunched all the numbers, they arrived at some helpful findings.

First: yes, prequestions reduced mind-wandering. More precisely, students who answered prequestions reported that they had given more of their attention to the video than those who solved the algebra problems.

Second: yes, prequestions promoted learning. Students who answered prequestions were more likely than those who didn’t to answer those questions correctly on a final test after the lecture.

Important note: this benefit applied ONLY to the questions that students had seen before. The researchers also asked students new questions — ones that hadn’t appeared as prequestions. The prequestion group didn’t score any higher on those new questions than the control group did.

Third: no, the timing of the questions didn’t matter. Students benefitted from prequestions asked at the beginning as much as those sprinkled throughout.

From Lab to Classroom

So, what should teachers DO with this information?

I think the conclusions are mostly straightforward.

A: The evidence pool supporting prequestions is growing. We should use them strategically.

B: This study highlights their benefits for reducing mind-wandering, especially in online classes or videos.

C: We don’t need to worry about the timing. We can ask all our prequestions up front or jumble them throughout the class; according to this study, either strategy gets the job done.

D: If you’re interested in specific suggestions on using and understanding prequestions, check out this blog post.

A Final Note

Research is, of course, a highly technical business. For that reason, most psychology studies make for turgid reading.

While this one certainly has its share of jargon-heavy, data-laden sentences, its explanatory sections are unusually easy to read.

If you’d like to get a sense of how researchers think, check it out!

Pan, S. C., Sana, F., Schmitt, A. G., & Bjork, E. L. (2020). Pretesting reduces mind wandering and enhances learning during online lectures. Journal of Applied Research in Memory and Cognition, 9(4), 542-554.
