Over at The Learning Scientists, Carolina Kuepper-Tetzel asks: is it better to take longhand notes? Or to annotate slides provided by the speaker? Or, perhaps, simply to listen attentively?

(Notice, by the way, that she’s not exploring the vexed question of longhand notes vs. laptop notes.)

Before we get to her answer, it’s helpful to ask a framing question: how do brain scientists approach that topic in the first place? What lenses might they use to examine it?

Lens #1: The Right Level of Difficulty

Cognitive scientists often focus on desirable difficulties.

Students might want their learning to be as easy as possible. But we've got lots of research showing that easy learning doesn't stick.

For instance: reviewing notes makes students feel good about their learning, because they recognize a great deal of what they wrote down. “I remember that! I must have learned it!”

However, that easy recognition doesn’t improve learning. Instead, self-testing is MUCH more helpful. (Check out retrievalpractice.org for a survey of this research, and lots of helpful strategies.)

Of course, we need to find the right level of difficulty. Like Goldilocks, we seek out a teaching strategy that’s neither too tough nor too easy.

In the world of note-taking, the desirable-difficulty lens offers some hypotheses.

On the one hand, taking longhand notes might require just the right level of difficulty. Students struggle — a bit, but not too much — to distinguish the key ideas from the supporting examples. They worry — but not a lot — about defining all the key terms just right.

In this case, handwritten notes will benefit learning.

On the other hand, taking longhand notes might tax students’ cognitive capacities too much. They might not be able to sort ideas from examples, or to recall definitions long enough to write them down.

In this case, handing out the slides to annotate will reduce undesirable levels of difficulty.

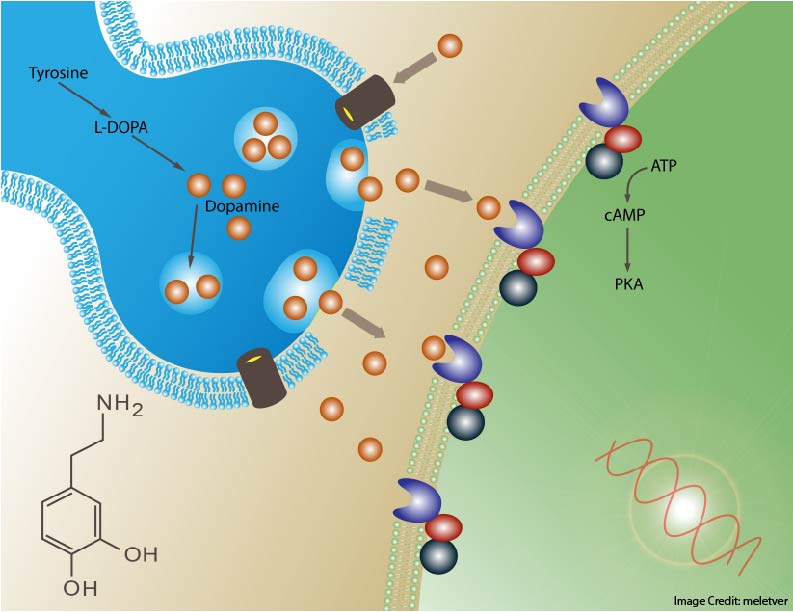

Lens #2: Working Memory Overload

Academic learning requires students to

focus on particular bits of information,

hold them in mind,

reorganize and combine them into some new mental pattern.

We’ve got a particular cognitive capacity that allows us to do that. It’s called working memory. (Here’s a recent post about WM, if you’d like a refresher.)

Alas, people need WM to learn in school, but we don't have very much of it. All too frequently, working memory overload prevents students from learning.

Here’s a key problem with taking longhand notes: to do so, I use my working memory to

focus on the speaker

understand her ideas

decide which ones merit writing down

reword those ideas into simpler form (because I can’t write as fast as she speaks)

write

(at the same time that I’m deciding, rewording, and writing) continue understanding the ideas in the lecture

(at the same time that I’m rewording, writing, and continuing) continue deciding what’s worth writing down.

That’s a HUGE working memory load.

Clearly, longhand notes impose a high WM load. Providing slides to annotate reduces that load.

Drum Roll, Please…

What does recent research tell us about longhand notes vs. slide annotation? Kuepper-Tetzel, summarizing a recent conference presentation, writes:

participants performed best … when they took longhand notes during the lecture compared to [annotating slides or passively listening].

More intriguing, the group who just passively viewed the lecture performed as well as the group who were given the slides and made annotations.

Whether the lecture was slow- or fast-paced did not change this result.

Longhand notetaking was always more beneficial for long-term retention of knowledge than both annotated slides and passive viewing.

By the way: in the second half of the study, researchers tested students eight weeks later. They found that longhand note-takers did as well as annotators, even though the note-takers studied less.

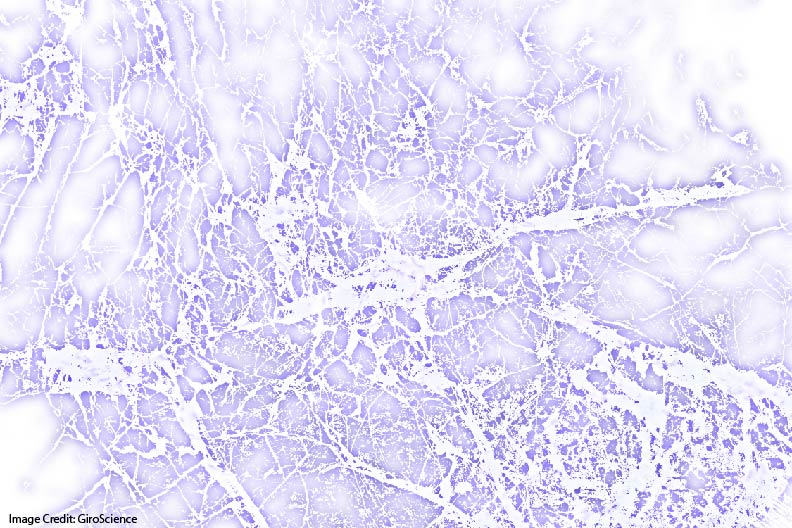

It seems that the desirable difficulty of handwriting notes yielded stronger neural networks. Those networks required less reactivation — that is, less study time — to produce equally good test results.

Keep In Mind…

Note that Kuepper-Tetzel is summarizing as-yet unpublished research. The peer-review process certainly has its flaws, but it can also provide some degree of confidence. So far, this research hasn't cleared that bar.

Also note: this research used lectures with a particular level of working memory demand. Some of our students, however, may have less working memory capacity than is typical in our particular teaching context. They might need more WM support.

We might also be covering especially complicated material on a particular day. That is: the WM challenges in our classes vary from day to day. On the more challenging days, all students might need more WM support.

In these cases, slides to annotate — not longhand notes — might provide the best level of desirable difficulty.

As is always the case, use your best professional judgment as you apply psychology research in your classroom.

About Andrew Watson