If you follow research in the world of long-term memory, you know you’ve got SO MANY GOOD STRATEGIES.

Agarwal and Bain’s Powerful Teaching, for instance, offers a delicious menu: spacing, interleaving, retrieval practice, metacognition.

Inquiring minds want to know: how do we best choose among those options? Should we do them all? Should we rely mostly on one, and then add in dashes of the other three? What’s the ideal combination?

One Important Answer

Dr. Keith Lyle and his research team wanted to know which strategy has the greater long-term impact in college math: retrieval practice or spacing?

That is: in the long term, do students benefit from more retrieval? From greater spacing? From both?

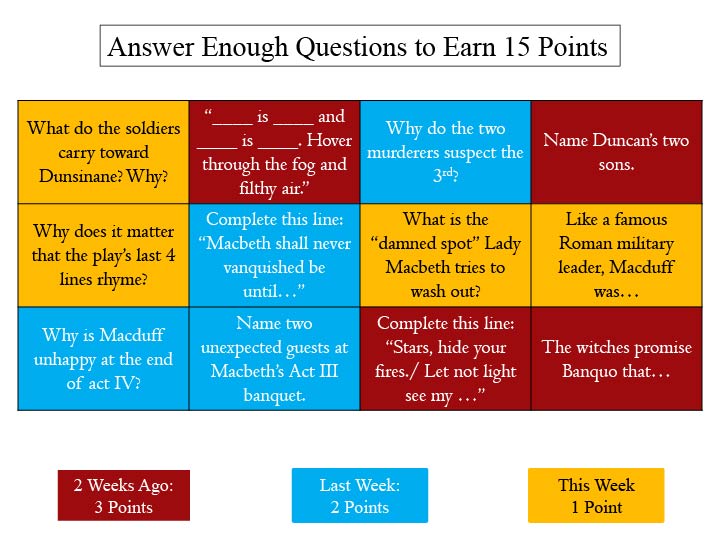

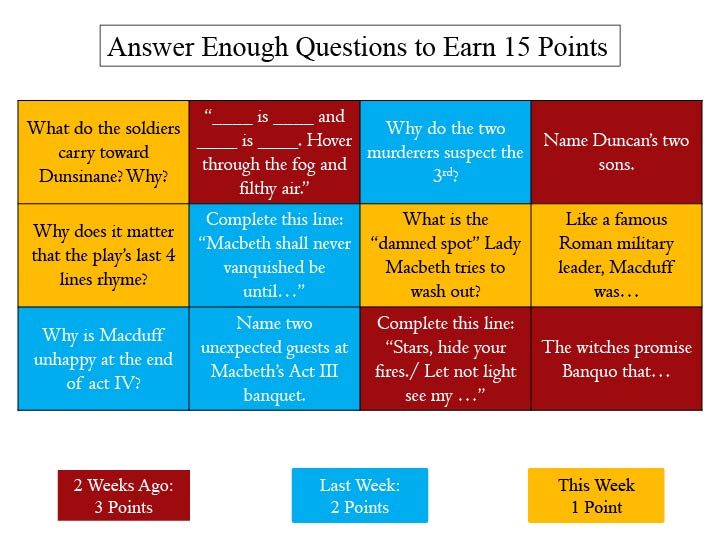

To answer this really important question, they carefully designed weekly quizzes in a college precalculus class. Some topics, at “baseline,” were tested with three questions at the end of the week. That’s a little retrieval practice, and a few days of spacing.

Some topics were tested with six quiz questions at the end of the week. That’s MORE retrieval practice, but the same baseline amount of spacing.

Some topics were tested with three quiz questions spread out over the semester. That’s baseline retrieval practice, but MUCH GREATER spacing.

And, some topics were tested with six quiz questions spread out over the semester. That’s extra retrieval AND extra spacing.

They then measured: how did these precalculus students do when tested on those topics on the final exam? And, hold on to your hats, how did they do when tested a month later, when they started the follow-up calculus class?

Intriguing Answers…

Lyle and Co. found that — on the precalculus final exam…

…extra retrieval practice helped (about 4 percentage points), and

…extra spacing helped (about 4 percentage points), and

…combining extra retrieval with extra spacing helped more (about 8 percentage points).

So, in the relatively short term, both strategies enhance learning. And, they complement each other.

What about the relatively longer term? That is, what happened a month later, on the pre-test for the calculus class? In that case…

…extra retrieval practice didn’t matter,

…extra spacing helped (about 4 percentage points), and

…combining extra retrieval with extra spacing produced no additional benefit (still about 4 percentage points).**

For enduring learning, then, extra spacing helped, but extra retrieval practice didn’t.

…Important Considerations

First: as the researchers note, it’s important to stress that this research comes from the field of math instruction. Math, more than most disciplines, already has retrieval practice built into it.

That is: when I do math homework, every problem I solve requires me (to some degree) to recall the math task at hand. (And, probably, lots of other relevant math info as well.)

But, when I do my English homework, the paper I’m writing about Macbeth might not remind me about Grapes of Wrath. Or, when I do my History homework, the time I spend studying Aztec civilization doesn’t necessarily require me to recall facts or concepts from the Silk Road unit. (It might, but might not.)

So, this study shows only that extra retrieval practice didn’t help over and above the considerable retrieval practice these math students were already doing.

Second: notice that the “spacing” in this case was a special kind of spacing. It was, in fact, spacing of retrieval practice. Of course, that counts as spacing.

But, we have lots of other ways to space as well. For instance, Dr. Rachael Blasiman tested spacing by taking time in lectures to revisit earlier concepts. That strategy did create spacing, but it didn’t include retrieval practice.

So, this research doesn’t necessarily apply to other kinds of spacing. It might, but we don’t yet know.

Practical Classroom Applications

Lyle & Co.’s study gives us three helpful classroom reminders.

First: as long as we’ve done enough retrieval practice to establish ideas (as math homework does almost automatically), we can redouble our efforts on spacing.

Second: Lyle mentions in passing that students do (very slightly) worse on quizzes that include spacing, because spacing is harder. (As regular readers know, we call this “desirable difficulty.”)

This reminder gives us an extra reason to be sure that quizzes with spacing are low-stakes or no-stakes. We don’t want to penalize students for participating in learning strategies that benefit them.

Third: in my own view, we can ask (and expect) our students to join us in retrieval practice strategies. Once they reach a certain age or grade, they should be able to make flashcards, use Quizlet, or test one another.

However, I think spacing requires a different perspective on the full scope of a course. That is: it requires a teacher’s perspective. We have the long view, and see how all the pieces best fit together.

For those reasons, I think we can (and should) ask students to do retrieval practice (in addition to the retrieval practice we create). But we ourselves should take responsibility for spacing. We, much more than they, have the big picture in mind. We should take that task off their to-do list and keep it squarely on ours.

** This post was revised on 3/7/30. The initial version did not include the total improvement created by retrieval practice and spacing one month after the final exam.

![Are “Retrieval Practice” and “Spacing” Equally Important? [Updated]](https://www.learningandthebrain.com/blog/wp-content/uploads/2020/02/AdobeStock_154724827.jpg)