Given the importance of feedback for learning, it seems obvious that teachers should have well-established routines around its timing.

In an ideal world, would we give feedback right away? 24 hours later? As late as possible?

Which option promotes learning?

In the past, I’ve seen research distinguishing between feedback given the instant a student answers and feedback given once students are done with the exercise: a difference of several seconds, perhaps a minute or two.

It would, of course, be interesting to see research into longer periods of time.

Sure enough, Dan Willingham recently tweeted a link to this study, which explores exactly that question.

The Study Plan

In this research, a team led by Dr. Hillary Mullet gave feedback to college students after they finished a set of math problems. Some got that feedback when they submitted the assignment; others got it a week later.

Importantly, both groups got the same feedback.

Mullet’s team then looked at students’ scores on the final exams. More specifically, if the students got delayed feedback on “Fourier Transforms” — whatever those are — Mullet checked to see how they did on the exam questions covering Fourier.

And: they surveyed the students to see which timing they preferred (right now vs. one week later).

The Results

I’m not surprised to learn that students strongly preferred immediate feedback. Students who got delayed feedback said they didn’t like it. And: some worried that it interfered with their learning.

Were those students’ worries correct?

Nope. In fact, just the opposite.

To pick one set of scores: students who got immediate feedback scored 83% on that section of an exam. Students who got delayed feedback scored 94%.

Technically speaking, that’s HUGE: a gap of 11 percentage points.

Explanations and Implications

I suspect that delayed feedback benefited these students because it effectively spread out their practice.

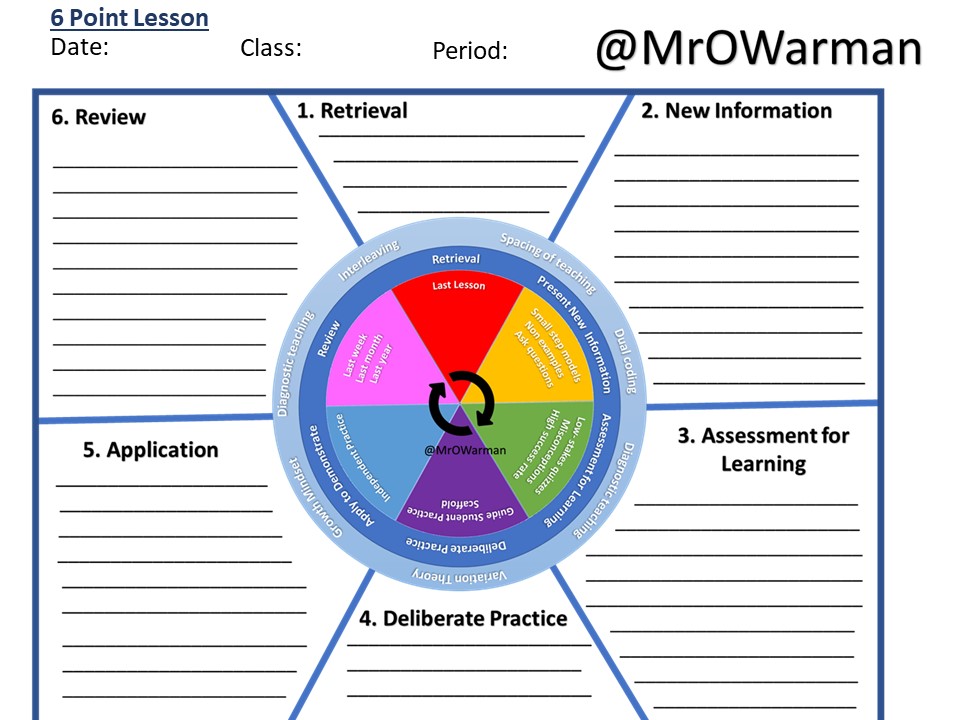

We have shedloads of research showing that spacing practice out enhances learning more than doing it all at once.

So, if students got feedback right away, they did all their Fourier thinking, all that mental work, in a single sitting.

However, if the feedback arrived a week later, they had to think about it an additional, distinct time. They spread that mental work out more.

If that explanation is true, what should teachers do with this information? How should we apply it to our teaching?

As always: boundary conditions matter. That is, Mullet worked with college students studying — I suspect — quite distinct topics. If they got delayed feedback on Fourier Transforms, that delay didn’t interfere with their ability to practice “convolution.”

In K-12 classrooms, however, students often need feedback on yesterday’s work before they can undertake tonight’s assignment.

In that case, it seems obvious that we should get feedback to them ASAP. As a rule: we shouldn’t require new work on a topic until we’ve given them feedback on relevant prior work.

With that caveat, Mullet’s research suggests that delaying feedback as much as reasonably possible might help students learn. The definition of “reasonably” will depend on all sorts of factors: the topic we’re studying, the age of our students, the trajectory of the curriculum, and so forth.

But: if we do this right, feedback helps a) because feedback is vital, and b) because it creates the spacing effect. That double-whammy might help our students in the way it helped Mullet’s. That would be GREAT.
