The summer stretches before you like a beach of relaxing joy. With a guilty-pleasure novel in one hand and an umbrella drink in the other, how should you best plan for the upcoming school year?

Let’s be honest:

You might want to give yourself a break. School is STRESSFUL. Some down time with your best friends — perhaps a refreshing walk in the woods — getting back into a fitness routine … all these sound like excellent ideas to me.

If, however, you’re the sort of person who reads education blogs in the summer, well, you might be looking for some ideas on refreshing your teaching life.

Since you asked…

The Essential Specifics Within the Big Picture

The good news about research-based teaching advice?

We have LOTS and LOTS of helpful suggestions!

The bad news about research-based teaching advice?

Well: we have LOTS and LOTS of helpful suggestions!! Probably too many suggestions to keep track of.

If only someone would organize all those suggestions into a handy checklist, then you might strategically choose just a few of those topics that merit your attention. If this approach sounds appealing to you, I’ve got even more good news:

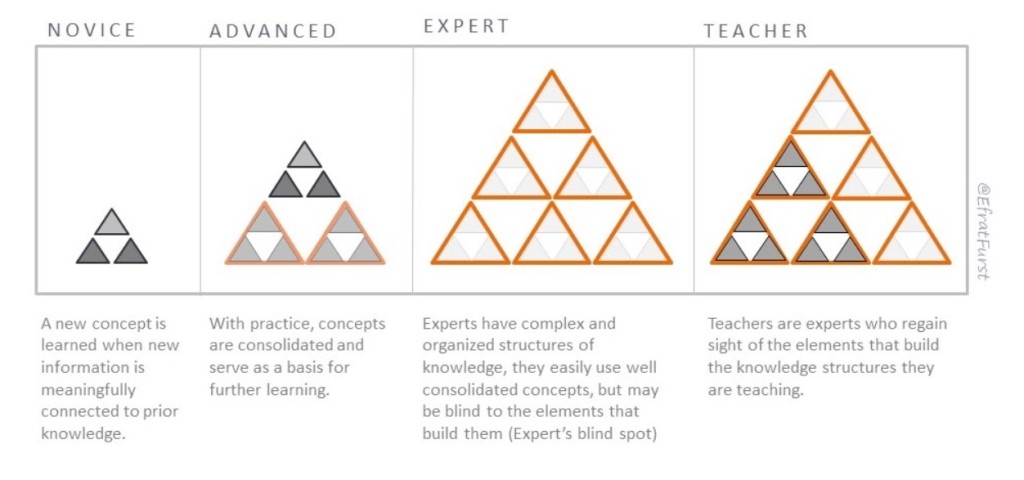

You can check out Sherrington and Caviglioli’s EXCELLENT book Walkthrus. This book digests substantial research into dozens of specific classroom topics (how to value and maintain silence; how to create a “no opt out” culture). It then offers 5-step strategies to put each one into practice.

In a similar vein, Teaching and Learning Illuminated, by Busch, Watson*, and Bogatchek, captures all sorts of teaching advice in handy visuals. Each one repays close study — in the same way you might closely study a Walkthru.

With these books, you can do a deep dive into as many — or as few — topics as you choose.

School Policy

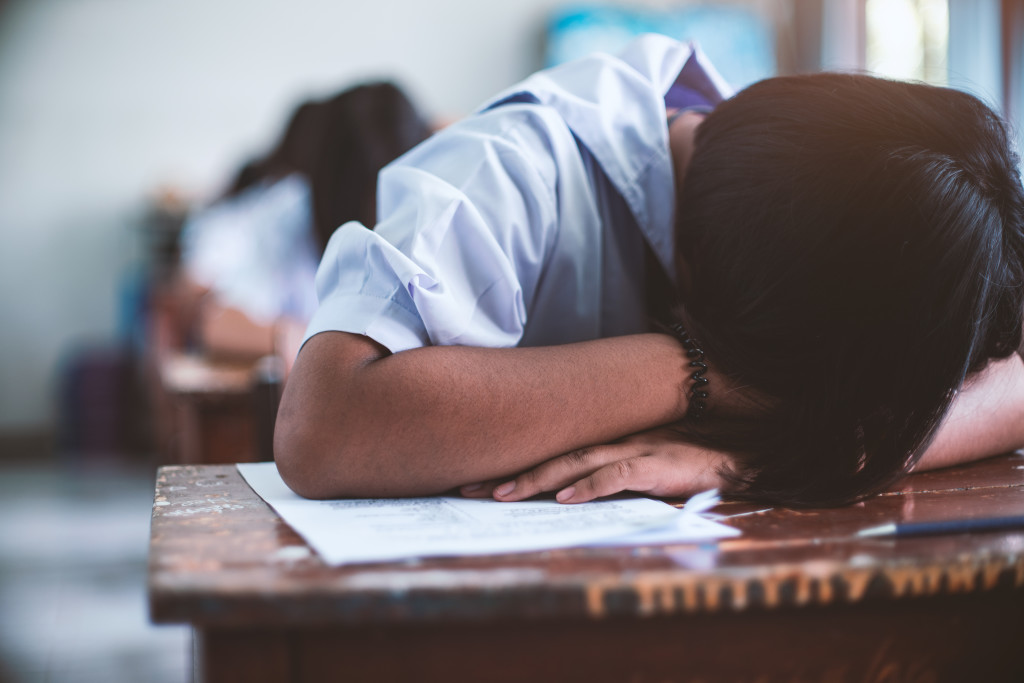

The hot topics in the education policy world are a) cell phones and b) AI.

As everyone knows, Jonathan Haidt’s recent book has made a strong case for heavily restricting cell phone usage for children.

I think it’s equally important to know that LOTS of wise people worry that Haidt is misinterpreting complex data.

Schools and teachers no doubt benefit from reading up on this debate. My own view, however, is that we should focus on the effects that phones (and other kinds of technology) have in our own schools and classrooms. Create policies based on the realities you see in front of you, not abstract data about people who might (but might not) resemble your students.

As for Artificial Intelligence: I think the field is too new — and evolving too rapidly — for anyone to have a broadly useful take on the topic.

In my brief experience, AI-generated results are too often flukily wrong for me to rely on them in my own work. (Every word of this blog is written by me; it’s a 100% AI-free zone.)

Even worse: the mistakes that AI makes are often quite plausible — so you need to be a topic expert to see through them.

My wise friend Maya Bialik — one-time blogger on this site, and founder of QuestionWell AI — knows MUCH more about AI than I do. She recommends this resource list, curated by Eric Curts, for teachers who want to be in the know.

A Pod for You

I’m more a reader than a pod-er, but:

If you’re in the mood for lively podcasts, I have two recommendations:

First, the Learning Scientists routinely do an EXCELLENT job translating cognitive science research for classroom teachers.

Unsurprisingly, their wise podcast is still going strong after many years.

Second, Dr. Zach Groshell’s podcast — Progressively Incorrect — explores instructional coaching, math and reading instruction, current debates in education, and other essential topics.

You might start with his interview with fan favorite Dan Willingham.

(Full disclosure: I have appeared on both podcasts, and am friends with the people who run them.)

The Journey Ends at Its Beginning

But, seriously, give yourself a break. You’ve worked hard. Take the summer off. I bet you’ve got A LOT of shows to binge-watch in your queue…

* A different “Watson”: EDWARD Watson. As far as I know, we’re not related.

About Andrew Watson
