When teachers try to use psychology research in the classroom, we benefit from a balance of optimism and skepticism.

I confess, I’m often the skeptic.

When I hear that – say – “retrieval practice helps students learn,” I hope that’s true, but I want to see lots of research first.

No matter the suggestion…

… working memory training!

… dual coding!

… mindfulness!

… exercise breaks!!!

… I’m going to check the research before I get too excited. (Heck, I even wrote a book about checking the research, in case you want to do so as well.)

Here’s one surprising example.

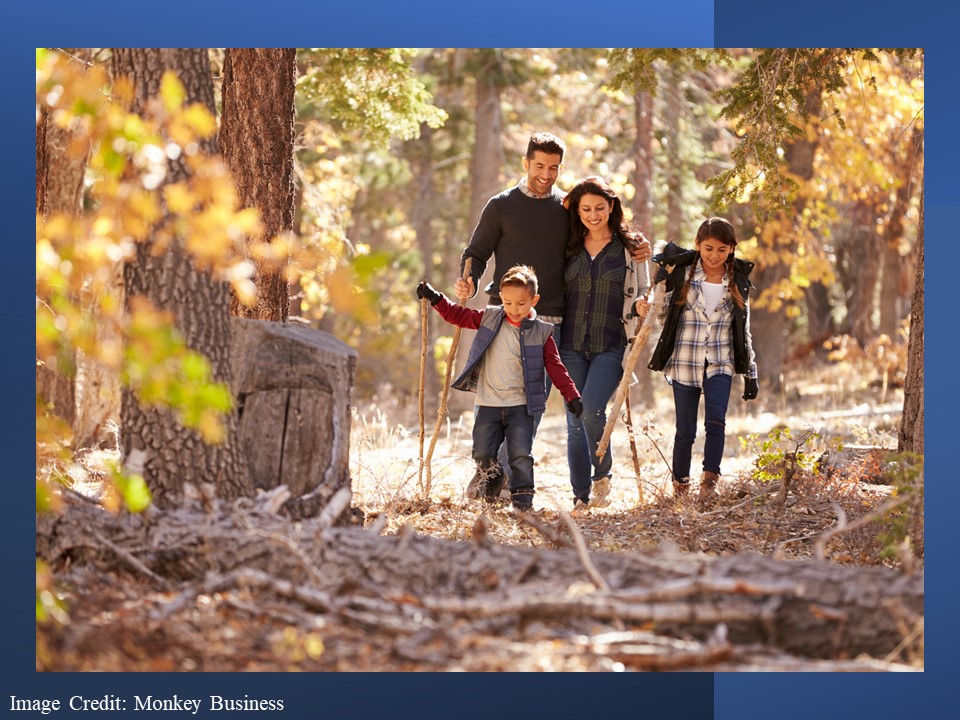

Because I really like the outdoors (summer camp, here I come!), I’d LOVE to believe that walking outside has cognitive benefits.

When I get all skeptical and check out the research…it turns out that walking outside DOES have cognitive benefits.

As I wrote back in May, we’ve got enough good research to persuade me, at least for now, that walking outdoors helps with cognition.

Could anything be better?

Yup, Even Better

Yes, reader, I’ve got even better news.

The research mentioned above suggests that walking restores depleted levels of both working memory and attention.

“Yes,” I hear you ask, “but we’ve got other important mental functions. What about creativity? What does the research show?”

I’ve recently found research that looks at that very question.

Alas, studying creativity creates important research difficulties.

How do you define “creativity”?

How do you measure it?

This research, done by Oppezzo and Schwartz, defines it thus: “the production of appropriate novelty…which may be subsequently refined.”

That is: if I can come up with something both new and useful, I’ve been creative – even if my new/useful thing isn’t yet perfect.

Researchers have long used a fun test for this kind of creativity: the “alternative uses” test.

That is: researchers name an everyday object, and ask the participants to come up with alternative uses for it.

For example, one participant in this study was given the prompt “button.” For alternative uses, s/he came up with…

“as a doorknob for a dollhouse, an eye for a doll, a tiny strainer, to drop behind you to keep your path.”

So much creativity!

Once these researchers had a definition and a way to measure, what did they find?

The research; the results

This research team started simple.

Participants – students in local colleges – sat for a while, then took a creativity test. Then they walked for a while, and took a second version of that test.

Sure enough, students scored higher on creativity after they walked than after they sat.

How much higher? I’m glad you asked: almost 60% higher! That’s a really big boost for such a simple change.

However, you might see a problem. Maybe students did better on the 2nd test (after the walking) because they had had a chance to practice (after the sitting)?

Oppezzo and Schwartz spotted this problem, and ran three more studies to confirm their results.

In one of those studies, they had some students sit then walk, while others walked then sat.

Results? Walking still helps.

In another study, they had some students walk or sit indoors, and walk or sit outdoors.

Results: walking promotes creativity both indoors and out.

Basically, they tried to find evidence against the hypothesis that walking boosts creativity…and they just couldn’t do it. (That’s my favorite kind of study.)

Just One Study?

Long-time readers know what’s coming next.

We teachers should never change our practice based on just one study – even if that study includes 4 different experiments.

So, what happens when we look for more research on the topic?

I’ve checked out my go-to sources: scite.ai and connectedpapers.com. (If you like geeking out about research, give them a try – they’re great!)

Sure enough, scite.ai finds 13 studies that support this conclusion, and 3 that might contradict it. (In my experience, that’s a good ratio.)

Connectedpapers.com produces fewer on-point results. However, the most recent study seems like a very close replication, and arrived at similar findings.

In brief: although I’m usually a skeptic, I’m largely persuaded.

TL;DR

Walking outdoors helps restore working memory and attention; walking either indoors or outdoors enhances creativity (at least as measured by the “alternative uses” test).

I’d love to see some studies done in schools and classrooms. For the time being, I think we have a persuasive foundation for this possible conclusion.

Our strategies for putting this research to good use will, of course, be different for each of us. But it’s good to know: simply walking about can help students think more creatively.

Oppezzo, M., & Schwartz, D. L. (2014). Give your ideas some legs: The positive effect of walking on creative thinking. Journal of Experimental Psychology: Learning, Memory, and Cognition, 40(4), 1142.

About Andrew Watson
