I collaborated on this post with Dr. Cindy Nebel. Her bio appears below.

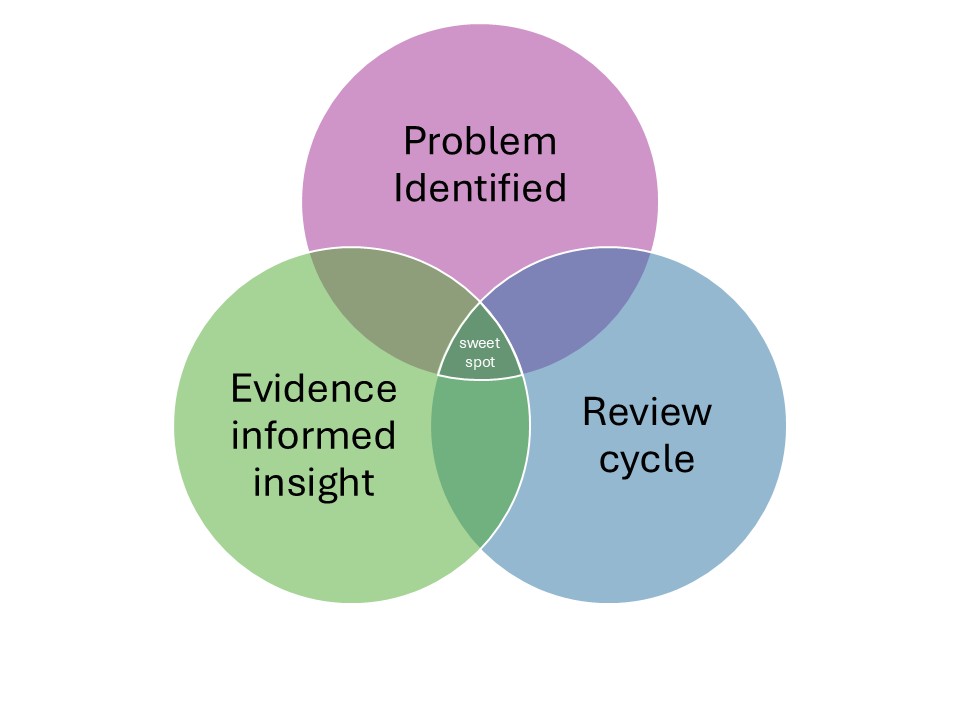

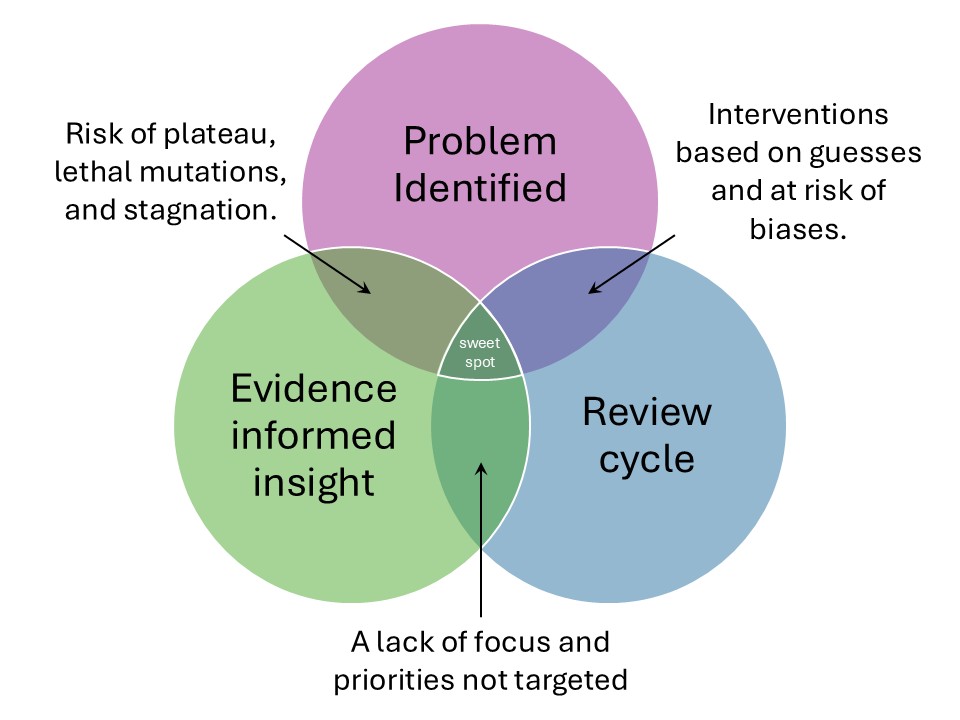

Everyone in this field agrees: we should begin our work with SKEPTICISM. When someone comes to us with a shiny new “research-informed” teaching suggestion, we should be grateful…and be cautious as well. After all:

The “someone” who gave us the “research-based” suggestion might…

- Misunderstand the research; it’s easy to do!

- Have found an outlier study; researchers rarely agree on complex subjects — like teaching and learning.

- Work in a context that differs from ours, and so offer a suggestion that helps their students but not other students.

- Misrepresent the research. Yup, that happens.

And so forth.

For all these reasons, we have to kick the tires when we’re told to change what we do because of research.

Easy Cases, Hard Cases

At times, this advice feels easy to follow. Ask any speaker at a Learning and the Brain conference, and they will assure you that:

- Learning Styles are not a thing;

- Left Brain/Right Brain distinctions don’t really matter;

- The Learning Pyramid (“students remember 5% of what they hear…”) is self-contradicting, and based on no research whatsoever;

- The list goes on…

Friends describe these ideas as “zombie beliefs”: no matter how many times we “kill them off” with quality research, they rise from the dead.

As we attack these zombie beliefs with our evidence stakes, we always chant “remember: you have to be SKEPTICAL!”

These cases are easy.

Alas, we often face hard cases. In my experience, those hard cases often combine two key elements:

- First: we already believe — and/or WANT to believe — the “research-based” claim; and

- Second: the research is neuroscience.

If a new neuro-study confirms a belief I already hold, my ability to be skeptical deserts me completely. I accept the research as obviously true — and obviously beyond criticism. I find myself tweeting: “Only a fool could disagree with this claim, which is now settled…”

Of course, if I fail to be skeptical in these hard cases, I’m abandoning scientific principles just as surely as people who purport to teach left-brained thinkers.

One example: in my experience, people REALLY want to believe that handwritten notes foster learning more surely than notes taken on a laptop. (I have detailed the flaws in this claim many times.)

A study published in 2024 is regularly used to support this “handwriting > laptop” claim. I first read about it in an article entitled “Handwriting promotes memory and learning.”

Notice that this study fits the pattern above:

- First: people already believe — and really WANT to believe — the claim.

- Second: it’s neuroscience.

LOTS of thoughtful people held this study up to champion handwritten notes.

Alas, because this study is a hard case, the skepticism practices that we typically advocate fell to the side. As it turns out, the flaws in this study are easy to spot:

- It’s based on a very small sample;

- The laptop note-takers had to type in a really, really unnatural way;

- The study didn’t measure how much the students remembered and learned.

No, I’m not making that last one up. People used a study to make claims about memory and learning even though the researchers DIDN’T MEASURE memory and learning.

In other words: in this hard case, even the most basic kinds of skepticism failed us — and by “us” I mean “people who spend lots of time encouraging folks to be skeptical.”

Today’s Hard Case

The most recent example of this pattern irrupted on eX/Twitter last week. An EEG study showed that students who used ChatGPT

a) remembered less of what they “wrote,” and

b) experienced an enduring reduction of important kinds of brain-wave activity.

Here’s a sentence from the abstract that captures that second point precisely:

“The use of LLM had a measurable impact on participants, and while the benefits were initially apparent, as we demonstrated over the course of 4 months, the LLM group’s participants performed worse than their counterparts in the Brain-only group at all levels: neural, linguistic, scoring.”

Once again, this study fits into the hard-case pattern:

- Confirms a prior belief (for LOTS of people), and

- Neuroscience

The unsurprisingly surprising result: this study has been enthusiastically championed as the final word on the harms of using AI in education. And some of that enthusiastic championing comes from my colleagues on Team Skepticism.

I want to propose a few very basic reasons to hesitate before embracing this “AI harms brains” conclusion:

- First: the PDF of this study clocks in at 206 pages. To evaluate a study of that length with a critical eye would take hours and hours and hours. Unless I have, in fact, spent all those hours critically evaluating a study, I should not rush to embrace its conclusions.

- Second: I’m going to be honest here. Even if I spent hours and hours, I’m simply not qualified to evaluate this neuroscience study. Not many people are. Neuroscience is such an intricately technical field that very few folks have the savvy to double- and triple-check its claims.

For the same reason you should not fly in a jet because I’ve assured you it’s airworthy, you should not trust a neuro study because I’ve vetted it. I can’t give a meaningful seal of approval — relatively few people can.

Knowing my own limitations here, I reached out to an actual neuroscientist: Dr. Cindy Nebel*. Here are her thoughts:

Here are my takeaways from this study:

1. Doing two different tasks requires different brain areas.

In this study, participants were explicitly told to write using AI or on their own. Unsurprisingly, you use a different part of your brain when you are generating your own ideas than if you are looking up content and possibly copying and pasting it. In this study, participants were explicitly encouraged to use AI to write their essay, so it’s likely they did — in fact — just copy and paste much of it.

2. When you think back on what you did using different brain areas, you use those same differentiated brain areas again.

When we remember an event from our lives, we actually reactivate the neural network associated with that event. So, let’s say I’m eating an apple while reading a blog post. My neural areas associated with taste, vision, and language will all be used. When I recall this event later, those same areas will be activated. In this study, the people who didn’t use their brains much when they were copy/pasting still didn’t use their brains much when they recalled their copy/pasting. This finding is entirely unsurprising and says nothing about getting “dumber”.

3. It’s harder to quote someone else than it is to quote yourself.

The only learning and memory effect in this study showed that individuals who copied and pasted had a harder time quoting their essays immediately after writing them than those who generated the ideas themselves. Shocking, right?

My neuroscience-informed conclusion from this study is that not using your brain results in less neural activation. [*insert sarcastic jazz hands here*.]

To be clear: I did not spend the requisite hours and hours reading the 206-page article. I did scan all 206 pages, read the methods thoroughly, and take a close look at the memory results in particular. I skipped the bulk of the paper, which is actually a linguistic analysis of the type of language used in prompts and essays written with and without the support of AI. I am very much not an expert in this area and, very importantly, this analysis seemed to make up the most important findings.

Back to you, Andrew.

Customary Caveats

This post might be misunderstood to say: “this study is WRONG; teachers SHOULD use AI with their students.”

I’m not making either of those claims. Instead, I am saying:

- Like all studies, this study should be evaluated critically and skeptically before we embrace it. Because it’s so complicated, few people have the chops to confirm its findings. (And not many have time to review 206 pages.)

- As for the use of AI in schools, I think the topic resists blanket statements. Probably the best shorthand — as is so often the case — goes back to Dan Willingham’s famous sentence:

“Memory is the residue of thought.”

If we want students to learn something (“memory”), they have to THINK about it. And if they’re using ChatGPT, they’re thinking about — say — high-quality prompts. They’re probably NOT thinking about the content of the essay, or effective essay-writing strategies.

Because we want students to think, we should, in almost all cases, encourage them to write without AI.

(To be clear: I think we could easily create assignments that cause students to think with AI. For instance: they could ask Claude to write a bad essay about The Great Gatsby: one that’s ill organized, ungrammatical, and interpretively askew. They could then correct that essay. VOILA: an AI assignment that results in thinking.)

Ironic Coda

I wrote this blog post based on my own thinking and understanding. I then shared my thoughts with Dr. Nebel, who offered her substantial commentary.

Next, as is my recent habit, I asked Claude to proofread this post, and to make any suggestions for clarity and logical flow. Based on its suggestions, I made a few changes.

In other words: this post has an inherent bias in it.

If I trust Claude — an AI assistant — I’m probably biased against research showing that AI assistants create enduring mental decrements. Although I doubt that this bias has misled me too far, I do think you should know that it exists.

* Dr. Nebel notes: “To the neuroscientists in the audience, Andrew is using that term generously. My degree in Brain, Behavior, and Cognition, yes, involved neuroscience courses, including a human brain dissection, and, yes, involved courses and research using fMRI. But I am not a neuroscientist in the strictest sense. I do, however, understand neuroscience better than the average bear.”

Dr. Cynthia Nebel is the Director of Learning Services and Associate Professor of Psychiatry and Behavioral Neuroscience at St. Louis University School of Medicine. She holds a Ph.D. in Brain, Behavior, and Cognition and has held faculty positions at Lindenwood, Washburn, and Vanderbilt Universities. Dr. Nebel has published two influential books on the science of learning and is a leading collaborator with The Learning Scientists, an organization focused on bridging the gap between learning research and educational practice. Dr. Nebel has presented the science of learning nationally and internationally and is dedicated to bridging research and practice to improve educational and organizational outcomes.

Van der Weel, F. R., & Van der Meer, A. L. (2024). Handwriting but not typewriting leads to widespread brain connectivity: A high-density EEG study with implications for the classroom. Frontiers in Psychology, 14, 1219945.

Kosmyna, N., Hauptmann, E., Yuan, Y. T., Situ, J., Liao, X. H., Beresnitzky, A. V., … & Maes, P. (2025). Your brain on ChatGPT: Accumulation of cognitive debt when using an AI assistant for essay writing task. arXiv preprint arXiv:2506.08872.

About Andrew Watson
