Here at Learning and the Brain, we want teachers and students to benefit from research. Obviously.

When psychologists discover important findings about the mind, when neuroscientists investigate the function of the brain, schools might well benefit.

Let’s start making connections!

At the same time, that hopeful vision requires care and caution. For instance, research (typically) operates in very specialized conditions: conditions that don’t really match most classrooms.

How can we accomplish our goal (applying research to the classroom) without making terrible mistakes (mis-applying research to the classroom)?

A Case in Point

Today’s post has been inspired by this study, by researchers Angela Brunstein, Shawn Betts, and John R. Anderson.

Its compelling title: “Practice Enables Successful Learning under Minimal Guidance.”

Now, few debates in education generate as much heat as this one.

Many teachers think that — because we’re the experts in the room, and because working memory is so small — teachers should explain ideas carefully and structure practice incrementally.

Let’s call this approach “high-structure pedagogy” (although it’s probably better known as “direct instruction”).

Other teachers think that — because learners must create knowledge in order to understand and remember it — teachers should stand back and leave room for adventure, error, discovery, and ultimate understanding.

Let’s call this approach “low-structure pedagogy” (although it has LOTS of other names: “constructivism,” “project/problem-based learning,” “minimal guidance learning,” and so forth).

How can we apply the Brunstein study to this debate? What do we DO with its conclusions in our classrooms?

If you’re on the low-structure team, you may assume the study provides the final word in this debate. What could be clearer? “Practice enables successful learning under minimal guidance.” Research says so!

If you’re on the high-structure team, you may assume it is obviously flawed, and look to reject its foolish conclusions.

Let me offer some other suggestions…

Early Steps

In everyday speech, the word “bias” has a bad reputation. In the world of science, however, we use the word slightly differently.

We all have biases; that is, we all have perspectives and opinions and experiences. Our goal is not so much to get rid of biases, but to recognize them — and recognize the ways they might distort our perceptions.

So, a good early step in applying research to our work: fess up to our own prior beliefs.

Many (most?) teachers do have an opinion in this high-structure vs. low-structure debate. Many have emphatic opinions. We should acknowledge our opinions frankly. (I’ll tell you my own opinion at the end of this post.)

Once you have taken this first vital step, let it shape your approach to the research. Specifically, try, at least temporarily, to convince yourself to change your mind.

That is: if you believe in low-structure pedagogy, look hard for the flaws in this study that seems to champion low-structure pedagogy. (BTW: all studies have flaws.)

If your biases incline you toward high-structure pedagogy, try to find this study’s strengths.

Swim against your own tide.

Why? Because you will read the study more carefully — and therefore will likely arrive at conclusions that benefit your students more.

Gathering Momentum

Now that you have a goal — “change my own mind” — look at the study to answer two questions:

First: who was in the study?

Second: what, exactly, did they do?

You should probably be more persuaded by studies where…

First: …the study’s participants resemble your students and your cultural context, and

Second: …the participants did something that sensibly resembles your own possible teaching practice.

So, in this case: the participants were undergraduates at Carnegie Mellon University.

If you teach undergraduates at a highly selective university — the Google tells me that CMU currently admits 14% of their applicants — then this study’s conclusions might help you.

However, if you teach 3rd graders, or if you teach at any school with open admission, those conclusions just might not offer useful guidance.

After all, high-powered college students might succeed at “minimal guidance” learning because they already know a lot, and because they’re really good at school. (How do we know? Because they got into CMU.)

What about our second question? What exactly did the participants do?

In this study, participants used a computer tutor to solve algebra-ish math problems. (The description here gets VERY technical; you can think of the problems as a proto-Kendoku, with algebra.)

What about the guidance they got? How “minimal” was it?

Getting the Definition Just Right

At this point, Brunstein’s study reminds us of an essential point.

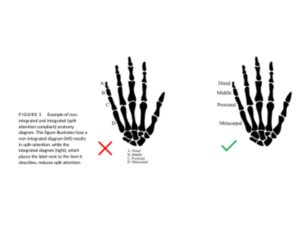

When teachers talk about educational practice, we use handy shorthand phrases to capture big ideas.

Metacognition. Mindfulness. Problem-based learning.

However, each of those words and phrases could be used to describe widely different practices.

Before we can know if this study about “minimal guidance” applies to our students, we have to know exactly what these researchers did that they’re calling minimal guidance.

Team Brunstein says exactly this. They see discovery learning and direct instruction not as two different things, but as ends of a continuum:

“No learning experience is pure: students given direct instruction often find themselves struggling to discover what the teacher means, and all discovery situations involve some minimal amount of guidance.”

In this case, “minimal guidance” involved varying degrees of verbal and written instructions.

This study concludes that under very specific circumstances, a particular blend of structure and discovery fosters learning.

So, yes, in some “minimal guidance” circumstances, students learned — and practice time helped.

However — and this is a big “however”:

In one part of the study, 50% of the students at the extreme “discovery” end of the spectrum quit the study. Another 25% of them went so slowly that they didn’t finish the assignment.

In other words: this study in no way suggests that all kinds of minimal guidance/discovery/PBL learning are always a good idea.

The “just right” blend helped: perhaps we can recreate that blend. But the wrong blend — “extreme discovery” — brought learning to a standstill.

Final Thoughts

First: when using research to shape classroom practice, it helps to look at specific studies.

AND it helps to look at groups of studies.

Long-time readers know that I really like both scite.ai and connectedpapers.com. If you go to those websites and put in the name of Brunstein’s study, you’ll see what MANY other scholars have found when they looked at the same specific question about minimal guidance. (Try it — you’ll like it!)

Second: I promised to tell you my own opinion about the low- vs. high-structure debate. My answer is: I think it’s the wrong question.

Because of working memory limitations, I do think that teachers should provide high structure during early stages of studying a topic.

And, for a variety of reasons, I think we should gradually transition to lower-structure pedagogies as students learn more and more.

That is:

We should use high-structure pedagogy with novices, who are early in schema formation.

And, we should use low-structure pedagogy with experts, who are later in the process of schema formation.

The question is not “which pedagogy to use?”

The better question is: “how can we identify stages along the process of students’ schema development, so we know when and how to transition our teaching?”

Research into that question is still very much in the early phases.

Brunstein, A., Betts, S., & Anderson, J. R. (2009). Practice enables successful learning under minimal guidance. Journal of Educational Psychology, 101(4), 790.

About Andrew Watson
