Let’s imagine that I — a 10th grade classroom teacher — want to motivate my students. I discover this list of motivational suggestions:

- listen to students

- give them time for independent work

- provide time for students to speak

- acknowledge students’ improvement

- encourage their effort

- offer them hints when they’re stuck

- respond to their comments and questions

- acknowledge students’ perspectives

Even better, I’m told that this list has RESEARCH behind it.

Before we dive in and adopt these suggestions, let’s pause for just a moment. Please look over that list and ask yourself: “if I were a student, would I feel more academically motivated if my teacher did any or all of those things?”

[I’m pausing here so you can review the list.]

Welcome back. If you like these suggestions, I’ve got some good news for you:

- This list DOES have research behind it, and

- That research, in turn, has a substantial theoretical perspective behind it.

For a few decades now, Edward Deci and Richard Ryan have been developing self-determination theory: a theory of motivation that gets LOTS of love in education. The headlines sound like this:

“If we want to improve our students’ academic motivation, we should be sure that they feel

- AUTONOMY,

- RELATEDNESS, and

- COMPETENCE

in the classroom.”

Those three feelings — again: autonomy, relatedness, and competence — foster all sorts of good human outcomes, including academic motivation.

I have even better news. Unlike almost all psychology theories, self-determination theory uses these words in their everyday meaning. I don’t need to offer several paragraphs of translation to describe what Deci and Ryan mean by “autonomy.” They mean EXACTLY what you think they mean.

(This news might not sound like a big deal. But — trust me — in the world of cognitive psychology, few scholars offer clear terminology.)

I’ve described my three-word summary as “the headlines” of Deci and Ryan’s theory. But my blog title says that three words aren’t enough. What happens when we go beyond those three words?

Not So Fast

We should start by admitting that LOTS of people who talk about self-determination theory don’t get past these headlines. You’ll read SDT summaries that list and define those words, and then conclude with uplifting advice: “teachers — just go do that!”

Alas, I think even the headlines themselves raise pressing questions.

For instance: to my ear, “autonomy” and “relatedness” suggest two contrasting vibes. The words don’t exactly contradict one another — they’re not antonyms. But it’s easy to imagine a teaching strategy that INCREASES one but REDUCES the other.

Consider point #6 on the motivational list above: “offer them hints when they’re stuck.”

- On the one hand, that advice could certainly foster a sense of relatedness in the classroom.

- A student might think: “I was struggling, and the teacher noticed and helped me. This is a great class!” This student didn’t precisely use the word “relatedness,” but that vibe is in the air.

- At the same time, offering hints might lessen another student’s sense of autonomy.

- This student might think: “Does this guy think I’m completely helpless? I would have gotten it if he just left me alone. Sheesh.” So much for feelings of autonomy.

Let’s throw “competence” into this mix. If I offer a struggling student a hint, she might think:

- “Oh, wow, I can solve this problem now! I feel so happy and successful!” She exudes an aura of joyful competence. Or

- “Oh, wow — my teacher thinks I’m so hopeless that he has to offer me the answer on a platter. I must be the biggest loser in this class.” Her feelings of competence have clearly drained away.

Yes, you will hear “autonomy! relatedness!! competence!!!” offered as a formula to enhance student motivation. But I don’t think this formula — or ANY formula — works simply as a formula.

Reading the Fine Print

SDT’s emphasis on autonomy, relatedness, and competence DOES provide an excellent place to start our pedagogical thinking. And: we need to keep going.

Each of the eight strategies listed above has been researched as a way to enhance student autonomy. Before we use any of them, however, I think we should stop and ask reasonable questions:

- What are the potential conflicts here? Will this strategy enhance one of the Big Three, but harm another?

- What are the individual differences here? Will this student respond to the strategy by feeling more competent, while that student responds by feeling more foolish?

- What are the cultural differences here? Will students in — say — Korea find a particular autonomy strategy confounding while their counterparts in — say — Brazil find that same strategy encouraging? (To be clear: I’m being entirely speculative here. I don’t know enough about either of those cultures to even attempt an example.)

I’ll offer one more example, simply to emphasize the concerns that trouble me.

Strategy #2 says that we should “give students time for independent work.”

- On the one hand, what could possibly be more foundational to teaching? OF COURSE students need time to work on their own.

- As a motivational benefit, all this independent work might make them feel autonomous and competent.

- On the other hand, students who lack appropriate prior knowledge could be overwhelmed and demotivated by all that time to work alone. What should they be doing? How do they do it?

- Motivationally, they have no partner to rely on (so much for “relatedness”), and they feel their own lack of skill all too keenly (goodbye, “competence”).

In other words: if I worry that my students lack motivation, I shouldn’t simply look at that list and pick whichever strategy sounds most uplifting and research-y. “Oh, yes, I’ll give them independent work time. They’ll feel more motivated!”

Instead, I should look at the list and ask myself those follow-up questions. In brief, does this uplifting and research-y teaching strategy fit my students’ current educational and motivational needs? Have I considered both upsides and downsides?

In fact: these concerns about self-determination theory point to a broader challenge that teachers face when trying to implement research-backed strategies.

The Bigger Picture

In writing this post, I am using self-determination theory as an example of a larger problem. Research and researchers certainly can — and should!! — offer classroom teachers practical guidance.

And: we should always filter that guidance with friendly-but-persistent questions:

- How good is this research? How many studies arrive at roughly the same conclusion?

- Will this guidance benefit MY students (not just someone’s students)?

- Does the benefit over here create a problem over there?

- Does the cost — in money and in time — outweigh the potential benefit?

And so forth.

In brief: let’s use research to inform our practice. And: let’s also commit to being educators who dig deeper and ask tougher questions. The best teaching happens not when we follow scripts and formulas, but when we think carefully about the unique circumstances and students in our classrooms.

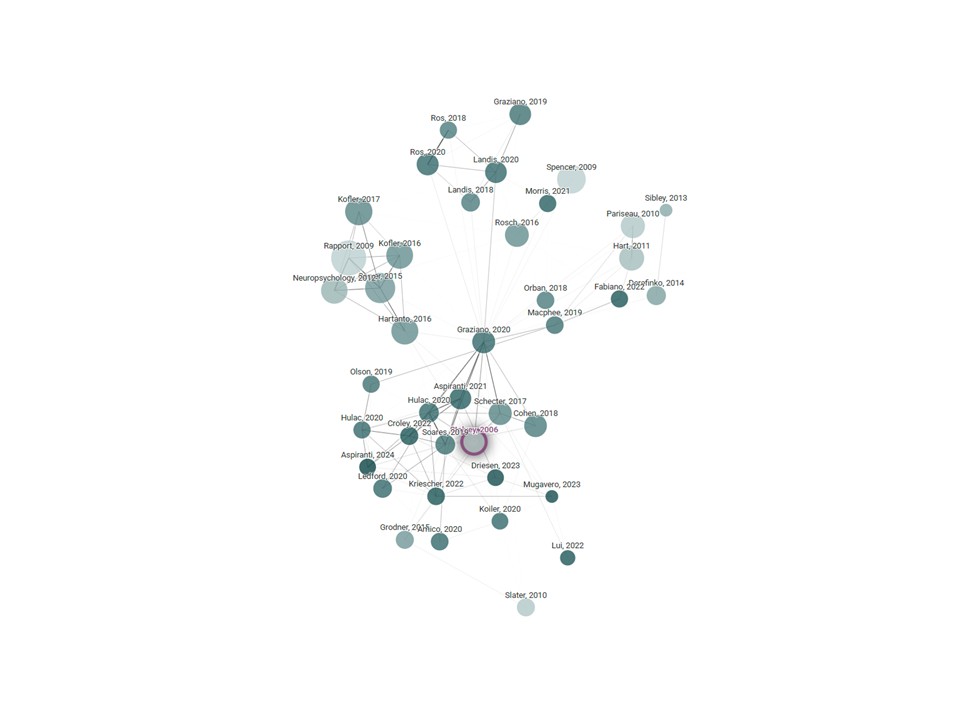

Reeve, J., & Jang, H. (2006). What teachers say and do to support students’ autonomy during a learning activity. Journal of Educational Psychology, 98(1), 209.

Ryan, R. M., & Deci, E. L. (2020). Intrinsic and extrinsic motivation from a self-determination theory perspective: Definitions, theory, practices, and future directions. Contemporary Educational Psychology, 61, 101860.