This post got a LOT of attention when our blogger first wrote it back in February:

The “jigsaw” method sounds really appealing, doesn’t it?

Imagine that I’m teaching a complex topic: say, the digestive system.

Asking students to understand all those pieces — pancreas here, stomach there, liver yon — might get overwhelming quickly.

So, I could break that big picture down into smaller pieces: puzzle pieces, even. Then I assign each piece to a different subgroup of students.

Group A studies the liver.

Group B, they’ve got the small intestine.

Group C focuses on the duodenum.

Once each group understands its organ — its “piece of the puzzle” — they can explain it to their peers. That is: they re-assemble the larger puzzle from the small, understandable bits.

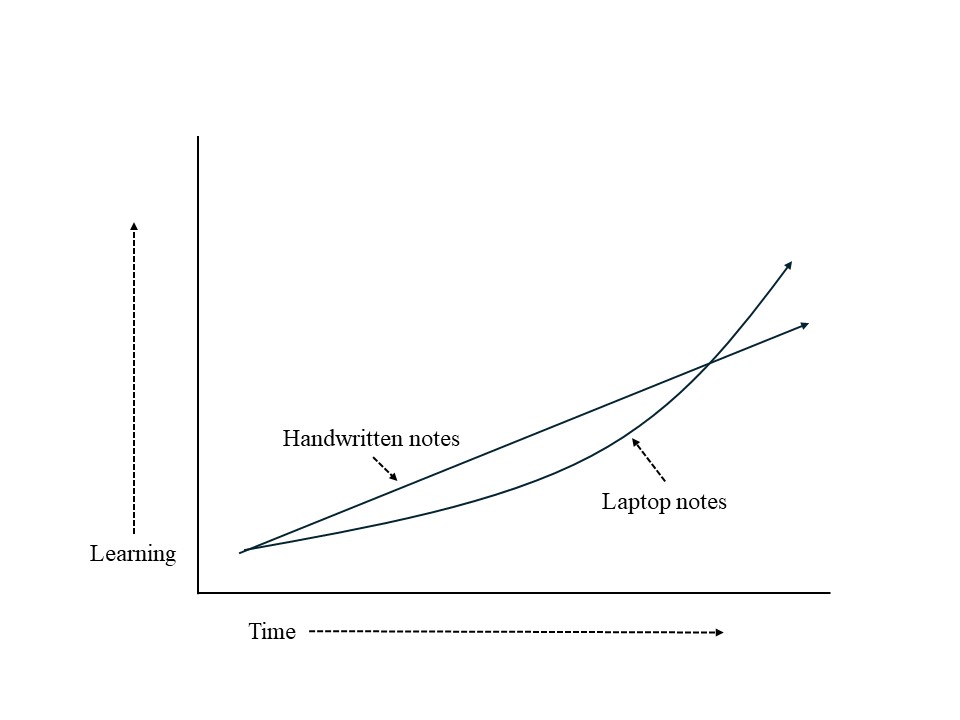

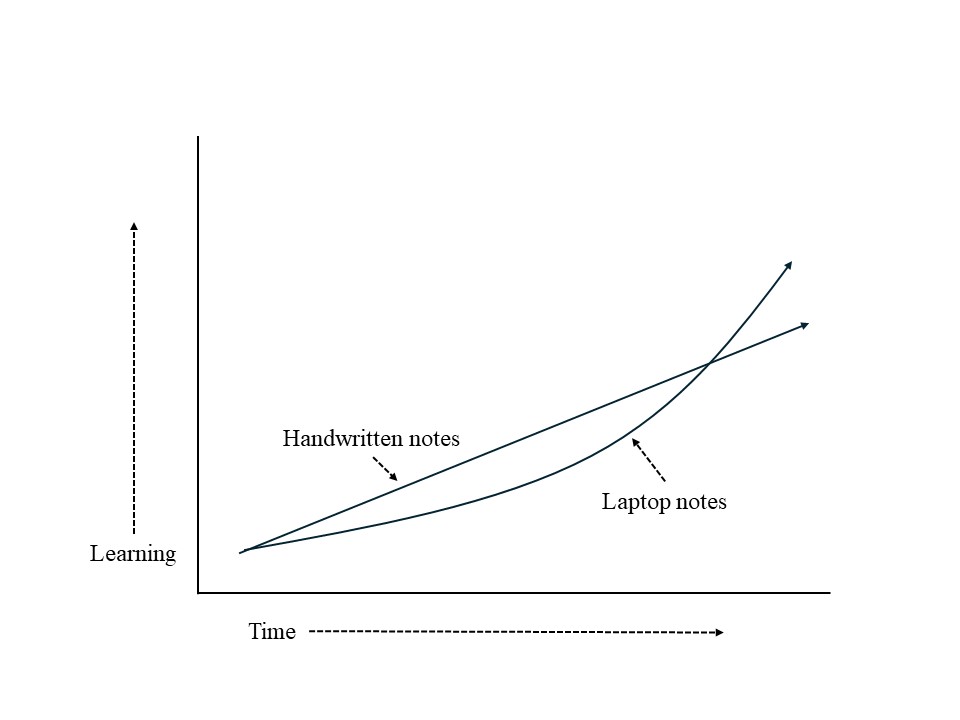

This strategy has at least two potential advantages:

First, by breaking the task down into smaller steps, it reduces working memory load. (Blog readers know that I’m a BIG advocate for managing working memory load.)

Second, by inviting students to work together, it potentially increases engagement.

Sadly, both those advantages have potential downsides.

First: the jigsaw method could reduce working memory demands initially. But it also increases those demands in other ways:

… students must figure out their organ themselves, and

… they have to explain their organ (that’s really complicated!), and

… they have to understand other students’ explanations of several other organs!

Second: “engagement” is a notoriously squishy term. It sounds good — who can object to “engagement”? — but how do we define or measure it?

After all, it’s entirely possible that students are “engaged” in the process of teaching one another, but that doesn’t mean they’re helpfully focused on understanding the core ideas I want them to learn.

They could be engaged in, say, making their presentation as funny as possible — as a way of flirting with that student right there. (Can you tell I teach high school?)

In other words: it’s easy to spot ways that the jigsaw method could help students learn, or could interfere with their learning.

If only we had research on the subject…

Research on the Subject

A good friend of mine recently sent me a meta-analysis purporting to answer this question. (This blog post, in fact, springs from his email.)

It seems that this meta-analysis looks at 37 studies and finds that — YUP — jigsaw teaching helps students learn.

I’m always happy to get a research-based answer…and I always check out the research.

In this case, that “research-based” claim falls apart almost immediately.

The meta-analysis crunches the results of several studies, and claims that jigsaw teaching has a HUGE effect. (Stats people: it claims a Cohen’s d of 1.20 — that’s ENORMOUS.)
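For readers who want to see what that number means: Cohen's d is the difference between two group means, divided by their pooled standard deviation. By Cohen's own rough conventions, 0.2 counts as small, 0.5 as medium, and 0.8 as large, so 1.20 is well past "large." Here is a minimal sketch of the calculation. The function name and the quiz scores are invented for illustration; they do not come from the meta-analysis.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(treatment, control):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = (
        (n1 - 1) * variance(treatment) + (n2 - 1) * variance(control)
    ) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / sqrt(pooled_var)


# Hypothetical quiz scores, made up for this example only.
jigsaw_scores = [2, 4, 6]
control_scores = [1, 3, 5]

print(cohens_d(jigsaw_scores, control_scores))
```

In this toy example the group means differ by 1 point and the pooled standard deviation is 2 points, so d is 0.5. A d of 1.20 would mean the jigsaw group's average sits more than a full standard deviation above the control group's average.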

You’ve probably heard Carl Sagan’s rule that “extraordinary claims require extraordinary evidence.” What evidence does this meta-analysis use to make its extraordinary claim?

Well:

… it doesn’t look at 37 studies, but at SIX (plus five student dissertations), and

… it’s published in a journal that doesn’t focus on education or psychology research, and

… as far as I can tell, the text of the meta-analysis isn’t available online — a very rare limitation.

For that reason, we know nothing about the included studies.

Do they include a control condition?

Were they studying 4th graders or college students?

Were they looking at science or history or chess?

We just don’t know.

So, unless I can find a copy of this meta-analysis online (I looked!), I don’t think we can accept it as extraordinary evidence of its extraordinary claim.

Next Steps

Of course, just because this meta-analysis bonked doesn’t mean we have no evidence at all. Let’s keep looking!

I next went to my go-to source: elicit.com. I asked it to look for research answering this question:

Does “jigsaw” teaching help K-12 students learn?

The results weren’t promising.

Several studies focus on college and graduate school. I’m glad to have that information, but college and graduate students…

… already know a great deal,

… are especially committed to education,

… and have higher degrees of cognitive self-control than younger students.

So, they’re not the most persuasive source of information for K-12 teachers.

One study from the Philippines showed that, yes, students who used the jigsaw method did learn. But it didn’t have a control condition, so we don’t know if they would have learned more doing something else.

After all, it’s hardly a shocking claim to say “the students studied something, and they learned something.” We want to know which teaching strategy helps them learn the most!

Still others report that “the jigsaw method works” because “students reported higher levels of engagement.”

Again, it’s good that they did so. But unless they learned more, the “self-reports of higher engagement” argument doesn’t carry much weight.

Recent News

Elicit.com did point me to a highly relevant and useful study, published in 2022.

This study focused on 6th graders — so, it’s probably more relevant to K-12 teachers.

It also included control conditions — so we can ask “is jigsaw teaching more effective than something else?” (Rather than the almost useless question: “did students in a jigsaw classroom know more afterwards than they did before?” I mean: of course they did…)

This study, in fact, encompasses five separate experiments. For that reason, it’s much too complex to summarize in detail. But the headlines are:

The study begins with a helpful summary of the research so far. (Tl;dr: lots of contradictory findings!)

The researchers worked carefully to provide appropriate control conditions.

They tried different approaches to jigsaw teaching — and different control conditions — to reduce the possibility that they’re getting flukey results.

It has all the signs of a study where the researchers earnestly try to doubt and double-check their own findings.

Their conclusions? How much extra learning did the jigsaw method produce?

Exactly none.

Over the course of five experiments (some of which lasted an entire school term), students in the jigsaw method group learned ever-so-slightly-more, or ever-so-slightly-less, than their control group peers.

The whole process averaged out to no difference in learning whatsoever.
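To make "averaged out" concrete: one common way to combine several experiments is an inverse-variance weighted mean of their effect sizes, where more precise experiments count for more. The sketch below assumes that approach. I am not claiming it is the exact procedure this study used, and every number in it is invented for illustration rather than taken from the paper.

```python
def pooled_effect(effects, variances):
    """Inverse-variance weighted mean of effect sizes (a fixed-effect average)."""
    # More precise experiments (smaller variance) get larger weights.
    weights = [1.0 / v for v in variances]
    return sum(w * d for w, d in zip(weights, effects)) / sum(weights)


# Hypothetical effect sizes for five experiments: some slightly positive,
# some slightly negative. These are NOT the study's actual results.
hypothetical_effects = [0.10, -0.08, 0.05, -0.06, -0.01]
hypothetical_variances = [0.02, 0.02, 0.02, 0.02, 0.02]

print(pooled_effect(hypothetical_effects, hypothetical_variances))
```

With small effects scattered on both sides of zero like these, the pooled estimate lands at essentially zero, which is the pattern described above.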

The Last Word?

So, does this recent study finish the debate? Should we cancel all our jigsaw plans?

Based on my reading of this research, I do NOT think you have to stop jigsawing or, for that matter, start jigsawing. Here’s why:

First: we’ve got research on both sides of the question. Some studies show that it benefits learning; others don’t. I don’t want to get all bossy based on such a contradictory research picture.

Second: I suspect that further research will help us use this technique more effectively.

That is: jigsaw learning probably helps THESE students learn THIS material at THIS point in the learning process. But it doesn’t help other students in other circumstances.

When we know more about those boundary conditions, we will know if and when to jigsaw with our students.

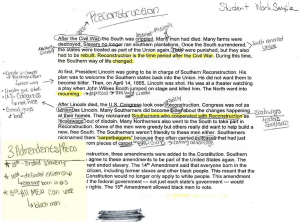

I myself suspect that we need to focus on a key, under-discussed step in the process: when and how the teacher ensures that each subgroup understands their topic correctly before they “explain” it to the next group. If they misunderstand their topic, after all, they won’t explain it correctly!

Third: let’s assume that this recent study is correct; jigsaw teaching results in no extra learning. Note, however, that it doesn’t result in LESS learning — according to these results, it’s exactly the same.

For that reason, we can focus on the other potential benefits of jigsaw learning. If it DOES help students learn how to cooperate, or foster motivation — and it DOESN’T reduce their learning — then it’s a net benefit.

In sum:

If you’re aware of the potential pitfalls of the jigsaw method (working memory overload, distraction, misunderstanding) and you have plans to overcome them, and

If you really like its potential other benefits (cooperation, motivation),

then you can make an informed decision about using this technique well.

At the same time, I certainly don’t think we have enough research to make jigsaw teaching a requirement.

As far as I know, we just don’t have a clear research picture on how to do it well.

By the way, after he wrote this post, our blogger then FOUND the missing online meta-analysis. His discussion of that discovery is here.

Stanczak, A., Darnon, C., Robert, A., Demolliens, M., Sanrey, C., Bressoux, P., … & Butera, F. (2022). Do jigsaw classrooms improve learning outcomes? Five experiments and an internal meta-analysis. Journal of Educational Psychology, 114(6), 1461.

![The Jigsaw Advantage: Should Students Puzzle It Out? [Repost]](https://www.learningandthebrain.com/blog/wp-content/uploads/2024/02/AdobeStock_190167656-1024x683.jpeg)