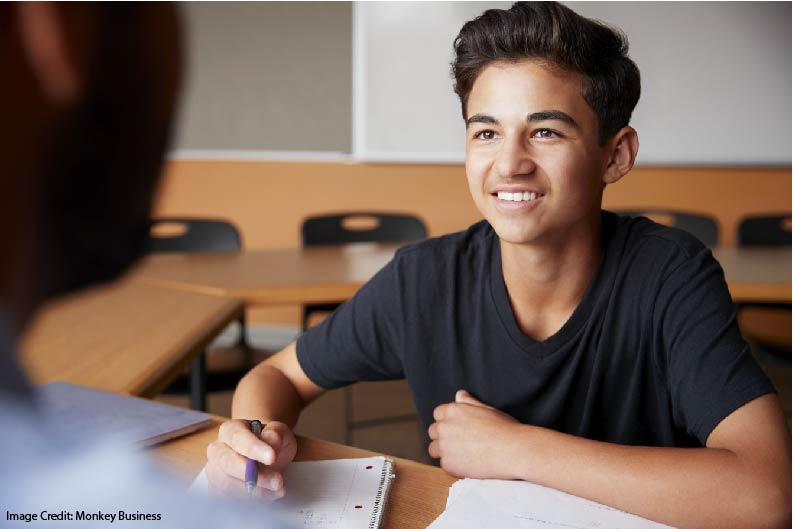

Should teachers welcome students to the classroom with elaborate individual handshakes?

Or — in these COVIDian days of ours — with elaborate dances? (If you’re on Twitter, you can check out @thedopeeducator’s post from March 17 of 2021 for an example.)

This question generates a surprising amount of heat. Around here that heat means: let’s look for research!

What Does “The Research” Say?

Truthfully, I can’t find much research on this question. Teachers have strong feelings on the subject, but the topic hasn’t gotten much scholarly attention.

The exception to this rule: Dr. Clayton Cook’s study on “Positive Greetings at the Door” from 2018.

As I described this study back in 2019, researchers trained teachers in a two-step process:

First: greet each student positively at the door: “Good morning, Dan — great hat!”

Second: offer “precorrective” reminders: “We’re starting with our flashcards, so be sure to take them out right away.”

The researchers trained five teachers (in sixth, seventh, and eighth grades) in these strategies.

Their results — compared to an “active” control group — were encouraging:

For the control group, time on task stayed in the mid-to-high 50% range, while disruptive behaviors took place about 15% of the time.

For the positive greeting group, researchers saw big changes.

Time on task rose from the high-50% range to more than 80%.

Disruptive behaviors fell from ~15% to less than 5% of the time.

All that from positive greetings.

A Clear Winner?

Handshake advocates might be tempted to read this study and declare victory. However, we have many good reasons to move more deliberately.

First: although handshakes are a kind of “positive greeting,” they’re not the only “positive greeting.” Researchers didn’t specify handshakes; they certainly didn’t require elaborate dances.

So, we can’t use this research to insist on either of those approaches. Teachers’ greetings should be specific and positive, but needn’t be handshake-y or dance-y.

Second: the “positive greetings” strategy requires an additional step: “precorrective guidance.” Once the handshake/greeting is complete, the teacher should offer specific directions about the next appropriate step…

… start the exercise on the board,

… take out your notebook and write the date,

… remember the definitions of yesterday’s key words.

Handshakes alone don’t match this research strategy. We need to do more to get these results.

Third: this research took place in a very specific context. Researchers asked principals to nominate classes that had seen higher-than-average levels of disruption.

That is: if your class is already well behaved, you might not see much of a change. (Of course, if your class is already well behaved, you don’t really need much of a change.)

And One More Thing (Well, TWO More Things)

I think Dr. Cook’s study is helpful, clear, and well done. However, as far as I know, it’s one of a kind. His research hasn’t been replicated (or, for that matter, contradicted). According to both Scite.ai and ConnectedPapers.com, this one study is everything we know from a research perspective.

In brief: the little research we have is encouraging. But: it doesn’t require elaborate choreography. It does require “precorrective guidance.” And, as Daniel Willingham says: “One study is just one study, folks.”

A final thought:

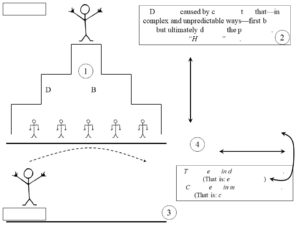

I suspect that “handshakes at the door” generate so much controversy because they’re a proxy for a wider battle of extremes.

Extreme #1: “If teachers put all our energy into forming relationships, students will inevitably learn more!”

Extreme #2: “That’s obviously dreadful nonsense.”

That is: “handshakes at the door” stand in for “relationships-first” teaching. Hence all the passion on Twitter.

This battle, I think, largely sacrifices sensible nuance to passionate belief.

On the one hand: of course, students (on average) learn more when they feel a sense of safety, respect, and connection. Some students (especially those who have experienced trauma) might struggle to learn without those things.

And, on the other hand: of course students can learn from teachers they don’t really like, and from teachers with whom they have no real connection. Lecture-based college courses depend on that model completely. So do military academies.

Handshakes at the door might help us connect with students if they feel comfortable and fun for us. But: plenty of individual teachers would feel awkward doing such a thing. Many school or cultural contexts would make such handshakes seem weird or silly or discomforting.

If such handshakes strengthen relationships, they might be a useful tool. If your relationships are already quite good, or if you’d feel foolish doing such a thing, or if your cultural context looks askance at such rituals, you can enhance relationships in other ways.

As is so often the case, we don’t need to get pulled onto a team — championing our side and decrying the other. We can, instead, check out available research, see how its conclusions apply to our context, and understand that optimal teaching practices might vary from place to place.
