Last year at this time, I summarized an ENORMOUS meta-analysis about retrieval practice.

The reassuring headlines:

Retrieval practice helps students of all ages in all disciplines.

Feedback after retrieval practice (RP) helps, but isn’t necessary to get the benefits.

The mode — online, clickers, pen and paper — doesn’t matter.

The meta also includes some useful limitations:

“Brain Dumps” help less than other kinds of RP.

Sadly, retrieval practice might make it harder for students to recall un-retrieved material.

So, researchers have kicked these tires A LOT. We know retrieval practice works, and we know how to avoid its (relatively infrequent) pitfalls.

What more could research tell us?

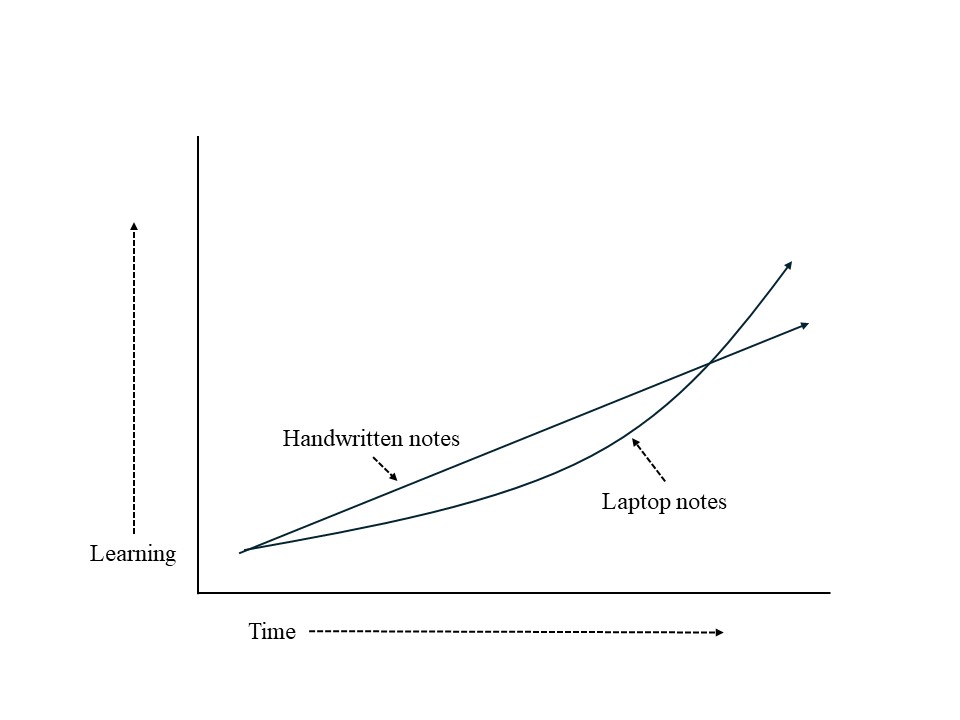

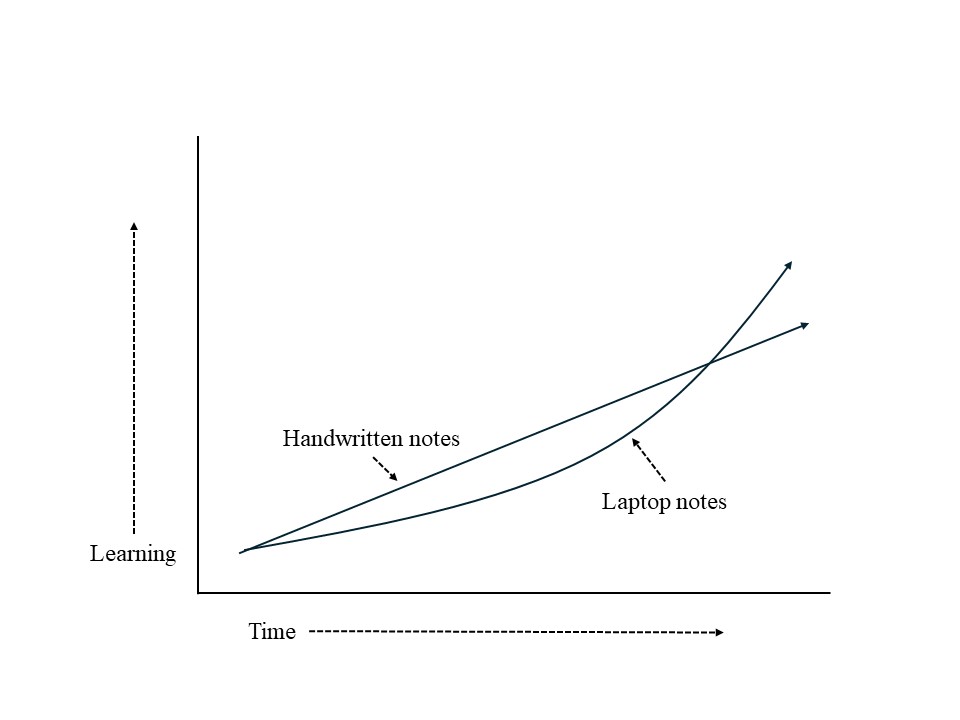

From “Lab” to “Classroom”

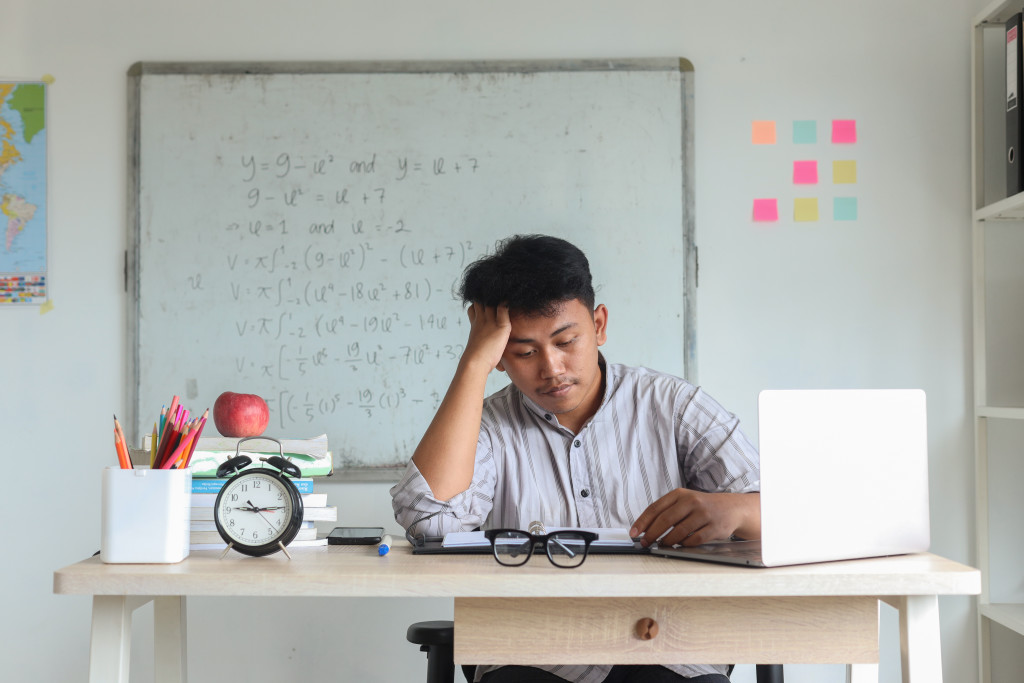

Psychology researchers typically start studying cognitive functions — like “memory” or “attention” — by doing experiments in their psychology labs, usually on college campuses.

These labs, of course, control circumstances very carefully to “isolate the variable.”

But let’s be honest: classrooms aren’t labs. Teachers don’t isolate variables; teachers combine variables.

So we’d love to know: what happens to retrieval practice when we move it outside of the psych lab into the classroom?

One recent survey study by Bates and Shea tries to answer this question.

In their research, Bates and Shea sent out a survey to teachers in English K-12 schools to find out what is happening “in the wild.”

Do teachers use retrieval practice?

If yes, how often?

When?

What kind of retrieval practice exercise do they prefer?

What do they do with the results of RP?

And so forth.

Once again, this study brings us LOTS of good news.

First: teachers (or, at least, the teachers who responded to this survey) use retrieval practice a lot.

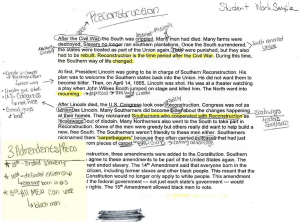

Second: they use a variety of retrieval practice strategies — short quizzes, do nows, even (less frequently) “brain dumps.”

Third: teachers use retrieval practice at different times during class: some at the beginning, some at the end, others throughout the lesson.

In other words: retrieval practice hasn’t simply turned into a precise set of rigid instructions: “you must do five minutes of retrieval practice by asking multiple choice questions at the beginning of every other class.” Instead, it’s a technique that teachers use as they see fit in their work.

Better and Better

For me, some of the best news from this survey comes from a surprising finding — well, “surprising” to me at least.

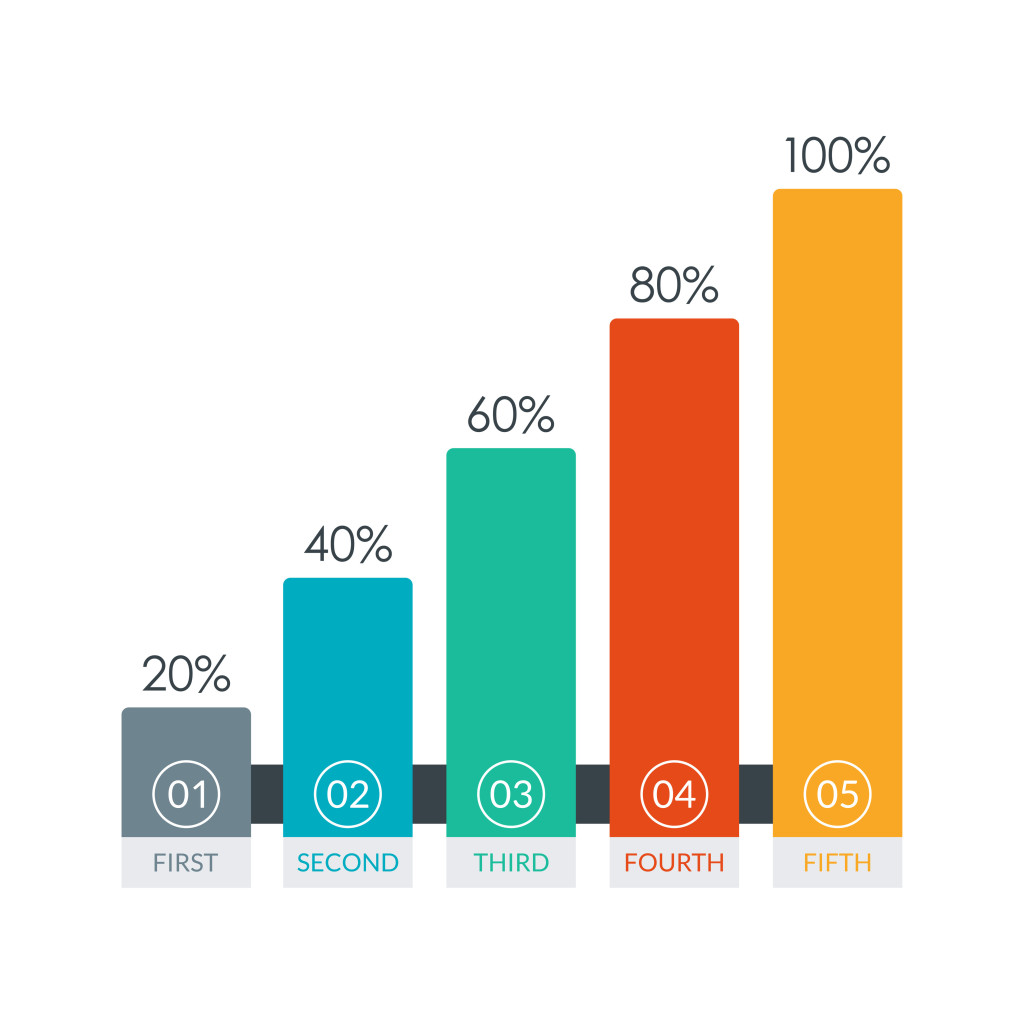

Where did teachers learn about retrieval practice?

Fully 84% learned about RP from their colleagues; 63% from internal staff training; 57% from books. Relatively few — just 20% — heard about it from training outside of school.

You might think that — as someone who blogs for a conference organization — I would want teachers to hear about RP from us.

And, of course, I’m delighted when teachers attend our conferences and hear about all the research on retrieval practice.

But the Bates and Shea data suggest that retrieval practice has in fact escaped the bounds of conference breakout rooms and really is living out there “in the wild.” Teachers hear about it not only from scholars and PowerPoint slides, but from one another.

This development strikes me as enormously good news. After all: I didn’t hear much of anything about RP when I got my graduate degree in 2012. A mere 12 years later, it’s now common knowledge even outside academia.

An Intriguing Question

One finding in the Bates and Shea study raised an interesting set of questions for me: what should teachers do after retrieval practice? In particular, what should teachers do when students get RP questions wrong?

We do have research to guide us here.

We know that students benefit when we correct their incorrect RP answers.

We also know that they learn more from RP than from simple review — even if they don’t get corrective feedback.

So, what do teachers “in the wild” actually do?

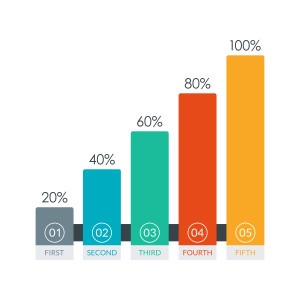

Some — 46% — reteach the lesson.

Some — 15% — give corrective feedback.

Some — 10% — use this information to shape homework assignments.

Of course, some teachers choose more than one of these strategies, or others as well (e.g., using RP answers to guide small-group formation).

At present, I don’t know that we have good research-based guidance on which strategy to use when. To me, these numbers suggest that teachers are responding flexibly to the specific circumstances that they face: minute by minute, class by class.

If you read this blog regularly, you know my mantra: “Don’t just do this thing; instead, think this way.”

If I’m reading this survey study correctly, teachers have

a) heard about retrieval practice from colleagues and school leaders,

b) adapted it to their classroom circumstances in a variety of ways, and

c) responded to RP struggles with equally flexible variety.

No doubt we can fine-tune some of these responses along the way, but these headlines strike me as immensely encouraging.

Bates, G., & Shea, J. (2024). Retrieval Practice “in the Wild”: Teachers’ Reported Use of Retrieval Practice in the Classroom. Mind, Brain, and Education.

About Andrew Watson

