In the exaggerated stereotype of an obsessively traditional classroom, students sit perfectly silent and perfectly still. They listen, and watch, and do nothing else.

Few classrooms truly function that way.

But how far should we go in the other direction? Can teachers, and should teachers, encourage noise and movement to help students learn?

In recent years, the field of embodied cognition has explored the ways that we think with our bodies.

That is: movement itself might help students learn.

Of course, this general observation needs to be explored and understood in very specific ways. Otherwise, we might get carried away. (About a year ago, for instance, one teacher inspired a Twitter explosion by having his students read while pedaling exercycles. I’ve spent some time looking at research on this topic, and concluded … we just don’t know if this strategy will help or not.)

So, let’s get specific.

Moving Triangles

An Australian research team worked with 60 ten- and eleven-year-olds learning about triangles. (These students studied in the intermediate math track; they attended a private high school, with higher-than-usual SES. These “boundary conditions” might matter.)

Students learned about isosceles triangles, and the relationships between side-lengths and angles, and so forth.

20 of the students studied in a “traditional way”: reading from the book.

20 studied by watching a teacher use software to manipulate angles and lengths of sides.

And, 20 studied by using that software themselves. That is: they moved their own hands.

Researchers wanted to know:

Did these groups differ when tested on similar (nearly identical) triangle problems?

Did they differ when tested on somewhat different problems?

And, did they rate their mental effort differently?

In other words: did seeing movement help students learn better? Did performing the movement themselves help?

The Envelope, Please

The software clearly helped. The actual movement sort of helped.

Students who interacted with the software themselves, and those who watched the teachers do so, did better on all the triangle problems. (Compared — that is — to students who learned the traditional way.)

And, they said it took less mental effort to answer the questions.

HOWEVER:

Students who used the software themselves did no better than the students who watched the teachers use it. (Well: they did better on the nearly identical problems, but not the newer problems that we care more about.)

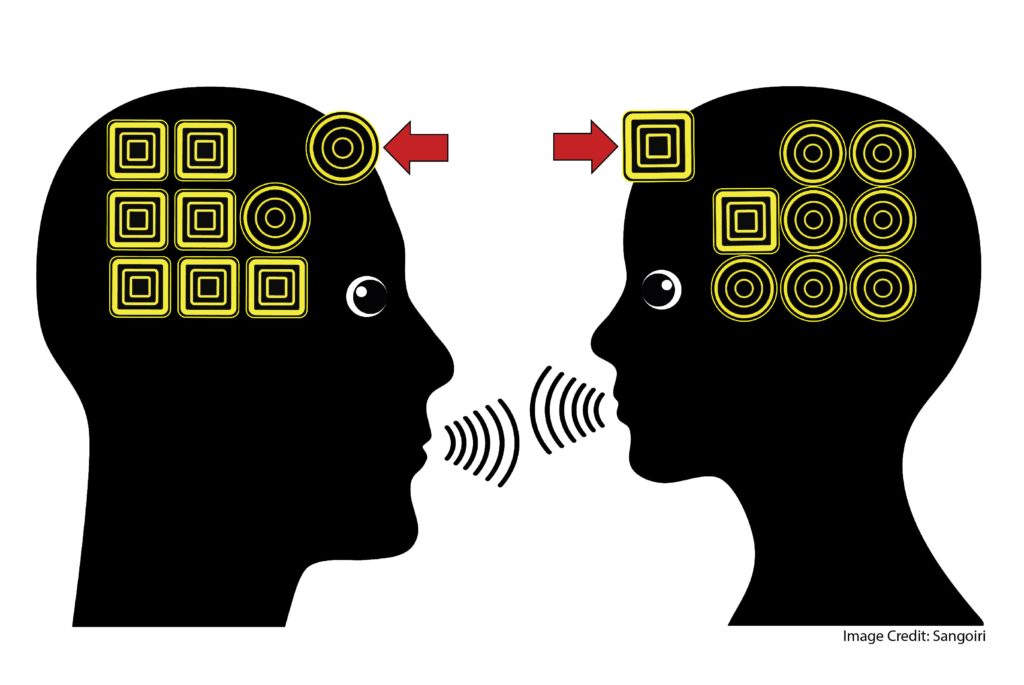

In other words: movement helped these students learn this material — but it didn’t really matter if they moved themselves, or if they watched someone else move.

The Bigger Picture

Honestly: research into embodied cognition could someday make a big difference in schools.

Once we’ve done enough of these studies — it might be dozens, it might be hundreds — we’ll have a clearer picture explaining which movements help which students learn what material.

For the time being, we should watch this space. And — fingers crossed — within the next 5 years we’ll have an Embodied Cognition conference at Learning and the Brain.

Until then: be wise and cautious, and use your instincts. Yes, sometimes movement might help. But don’t get carried away by dramatic promises. We need more facts before we draw strong conclusions.

Bokosmaty, S., Mavilidi, M. F., & Paas, F. (2017). Making versus observing manipulations of geometric properties of triangles to learn geometry using dynamic geometry software. Computers & Education, 113, 313-326.

About Andrew Watson
